Google Lens is rapidly becoming one of the most versatile tools on iPhones, and its latest update marks a major leap forward for visual search on iOS. With a rollout that brings “Circle to Search” (now branded as Screen Search) and AI-powered overviews to Apple’s ecosystem, Google is eliminating friction between what you see and what you can learn.

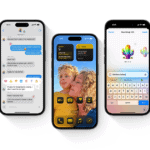

This update doesn’t just tweak the user interface—it changes the way iPhone users interact with images, video, and real-time information on their screens. Instead of capturing screenshots or jumping between apps, users can now perform instant searches with simple gestures.

New: Screen Search Brings Gesture-Based Visual Queries to iOS

Previously exclusive to Android flagships like the Pixel and Galaxy S24 series, Screen Search is now available on iPhones via Chrome 133 and the Google app. It lets users:

- Circle, tap, or highlight any part of the screen to search what’s there.

- Skip screenshots entirely—Lens pulls contextual results without the need to switch apps.

- Tap the new Lens icon in the Chrome address bar (coming soon) for even faster access.

This visual-first interaction is part of Google’s push to make search feel less like typing into a box and more like interacting with the world naturally. It’s also a response to growing user demand for on-screen AI tools that bridge images, live content, and real-world objects.

AI Overviews Now Appear in Lens Results

One of the most compelling features in this update is the integration of AI-generated overviews into Google Lens results:

- When you search with Lens, Google can now summarize what you’re seeing using Gemini-powered AI.

- These overviews pull from Google’s Knowledge Graph, combining visual recognition with natural language generation to explain context, background, and related topics.

This means if you scan a historical building, a painting, or a product label, you’ll receive real-time summaries and suggestions—no extra input needed. It’s like having a tour guide or product expert embedded in your phone.

AI Overviews are live for English-language users in regions where AI Overviews are available, and Google says desktop versions are coming later this year.

Coming Soon: Google Lens for YouTube Shorts

Google is also expanding Lens into video content. A beta feature launching later this summer will allow iPhone users to:

- Tap the Lens icon directly in YouTube Shorts to identify people, locations, objects, or text.

- Translate captions or signs seen in the video without leaving the app.

- Get search results in real-time while watching content—no more pausing to Google it manually.

This move signals Google’s intent to blur the lines between search, content, and entertainment. With more than 70 billion daily views on Shorts, the integration of Lens here positions it as a next-gen content layer for social media, not just a utility app.

Google has emphasized that, to protect privacy, the tool won’t perform facial recognition. However, it can surface metadata about places and products, so creators should be aware of what’s identifiable in their videos.

Key Rollout Timeline

| Date | Feature | Status |
|---|---|---|
| February 19, 2025 | Screen Search + AI Overviews launch on Chrome & Google app | ✅ Live Now |
| Summer 2025 | Google Lens for YouTube Shorts (beta) | ⏳ Coming Soon |

✅ What It Means for iPhone Users

- Faster, smarter searches: No more typing or screenshotting—just gesture and go.

- AI-powered insight: Learn about art, landmarks, products, and more in real time.

- Integrated experience: Soon, visual search won’t stop at the web—it’ll extend to video, too.

This evolution shows how Google is embedding search directly into the apps iPhone users already have open. Screen Search works inside Chrome and the Google app rather than across iOS as a whole, but within those apps it removes the step between seeing something and searching for it. It’s a bold move that sets the stage for how AI and search will converge in the mobile era.

Key Takeaways

- Google Lens now allows iPhone users to search screen content instantly using gestures like drawing, tapping, or highlighting

- Screen Search, the iOS counterpart to Android’s Circle to Search, brings gesture-based visual search to iPhones, with AI Overviews available to English-language users in supported regions

- The updated Google app integrates Lens functionality to help users explore the world around them through their phone’s camera

Google Lens Integration with iPhone

Google Lens has become a powerful visual search tool accessible to iPhone users. It allows iOS users to search what they see through their camera or existing photos, identifying objects and providing relevant information from the web.

Compatibility and Installation

Google Lens works on iPhones running iOS 11 or later. Users don’t need to download a separate app specifically for Lens, as it comes integrated within the Google app and Google Chrome browser.

To get started, iPhone users should download the Google app from the App Store. The app is free and provides full access to Google Lens functionality. Alternatively, users can access Lens features through the Google Chrome browser if they prefer using it for web searches.

Regular updates improve the tool’s capabilities, so keeping the Google app updated ensures access to the latest Lens features. Google has made Lens globally available for iPhones, expanding its reach beyond Android devices.

Accessing Google Lens on iOS Devices

There are multiple ways to access Google Lens on an iPhone. The most common method is through the Google app: open the app and tap the Lens (camera) icon in the search bar.

In Chrome, users can tap the Google Lens icon in the search bar. A newer feature allows users to circle content directly on their iPhone’s screen to search for what appears on-screen.

To analyze existing photos, users can:

- Open the Google app

- Tap the Lens icon

- Select a photo from their gallery

For real-time searches, tap the camera icon and point the phone at the object of interest.

User Interface and Navigation

The Google Lens interface on iPhone is designed to be intuitive and user-friendly. When activated, the camera view displays a clean layout with minimal buttons to avoid distraction.

At the bottom of the screen, users will find various search modes represented by icons. These include options for:

- Text recognition and translation

- Shopping

- Places identification

- Food searches

- Object identification

Results appear as cards at the bottom of the screen that users can swipe up to expand. Tapping on these cards provides more detailed information. Users can also save search results for later reference.

The navigation is gesture-based, making it easy to dismiss results by swiping down or return to the main Google app by tapping the back button in the top left corner.

Features and Capabilities of Google Lens

Google Lens transforms how iPhone users interact with the world through their camera. This powerful visual search tool combines AI technology with image recognition to help users identify objects, translate text, shop, search, and access information in new ways.

Real-Time Object Identification

Google Lens excels at identifying objects in real-time through the iPhone camera. Users simply point their camera at an item, and the app quickly recognizes what it’s seeing.

The technology can identify plants, animals, landmarks, products, and many other objects. For example, pointing Lens at a flower can provide its species name and care information.

This feature uses advanced AI to analyze visual elements and match them with Google’s vast database of images. The identification happens almost instantly, giving users immediate information about what they’re looking at.

Object identification works in various lighting conditions and can even recognize partially visible items. This makes it helpful for learning about unfamiliar objects you encounter in daily life.

Text Translation and Recognition

Google Lens offers powerful text capabilities that make reading and understanding foreign languages easier. When pointed at text in another language, Lens can translate it in real-time right on your screen.

The app recognizes both printed and handwritten text across numerous languages. This makes it valuable for traveling, reading foreign menus, or understanding international documents.

Beyond translation, Lens can extract text from images, allowing users to copy text from photos, screenshots, or signs. This text can then be pasted into other apps or searched online.

For students, Lens helps with solving math problems by recognizing equations. It can also look up unfamiliar words by simply highlighting them through the camera view.

Shopping with Visual Search

Google Lens transforms shopping by allowing iPhone users to search for products they see in the real world. By taking a photo of an item, Lens identifies similar products available for purchase online.

The tool recognizes clothing, furniture, home decor, and many other consumer goods. It provides information about where to buy them and compares prices across retailers.

Users can find exact matches or visually similar items that fit their style preferences. This feature is particularly helpful when you spot something you like but don’t know the brand or product name.

When shopping in stores, Lens can scan barcodes to provide product reviews and additional information. It helps consumers make informed purchasing decisions based on visual identification.

Image Search Enhancement

Google Lens enhances how iPhone users search with images. The newest feature allows users to search for anything they see on their screen by drawing over, highlighting, or tapping what interests them.

This visual search works on anything displayed in Chrome or the Google app, including photos, web pages, and videos. Users no longer need to type complex descriptions when they can simply circle or tap what they want to know more about.

The tool integrates with the iPhone camera to search based on real-time visual input. This creates a seamless experience between seeing something interesting and learning more about it.

Image search is now more interactive and intuitive. Users can be more specific about which parts of an image they want information on, leading to more accurate search results.

Augmented Reality Information Overlays

Google Lens uses augmented reality (AR) to display information directly over what users see through their camera. This creates an interactive experience where digital information appears layered on the physical world.

When viewing landmarks, Lens can overlay historical information, visitor details, and interesting facts. This transforms ordinary sightseeing into an educational experience.

In restaurants, pointing Lens at a menu can highlight popular dishes, ingredients, and reviews from other diners. The AR overlays appear contextually relevant to what the camera sees.

For learning environments, Lens can add interactive elements to textbooks or educational materials. This brings static content to life with additional resources and visual explanations.

Frequently Asked Questions

Google Lens for iPhone has evolved with several new features and improvements. Users often have questions about how to use this visual search tool effectively on iOS devices.

What are the new features added to Google Lens on iPhone recently?

Recent updates to Google Lens for iPhone include AI Overviews that provide more context about what you’re searching for visually. Chrome and the Google app also now offer Screen Search, which lets users circle, tap, or highlight anything on screen to search it.

Google has also improved the visual recognition algorithms to better identify objects in photos. These enhancements help users get more accurate and detailed information about the items they scan.

How can I download the Google Lens application for my iPhone?

Google Lens is not a standalone app on iPhone. To use it, search for “Google” in the App Store and install the free Google app, which includes Lens. Lens is also built into the Google Chrome browser.

The application is compatible with most iPhone models running recent iOS versions. After downloading, users will need to grant the app permission to access their camera.

Is there a way to use Google Lens to search online by image on an iPhone?

Yes, iPhone users can search by image using Google Lens in several ways. They can take a photo directly through the app or select an existing image from their photo library.

Users can also use Google Lens within the Google app by tapping on the Lens icon. This allows them to quickly search for information about objects, text, or places in images.

Has Google released a version of Google Lens specifically for iPhone users?

Google has not released a standalone Lens app for iPhone. Instead, Lens is built into the Google app and Chrome, and Google has optimized it for iOS devices. While the core functionality remains similar to the Android version, the interface has been adapted to follow iOS design patterns.

Some users of newer iPhones, such as the iPhone 13 and iPhone 15 Pro, have reported specific issues with camera focusing. Google continues to update the app to address these iOS-specific challenges.

Can Google Lens for iPhone be integrated with other iOS applications?

Google Lens can work alongside other iOS applications through the share feature. Users can share images from their Photos app directly to Google Lens for analysis.

The tool can also be accessed through the Google app and Google Photos on iOS. This integration makes it convenient to use Lens features within Google’s ecosystem on iPhone.

What improvements have been made to the image recognition capabilities of Google Lens for iOS?

Google has enhanced the image recognition technology in Lens for iOS to better identify objects, text, and landmarks. The system now provides more accurate search results and contextual information.

Some iPhone 15 Pro users have reported issues with camera focus in the app. According to these reports, the app sometimes struggles to focus automatically when recognizing items on iOS 18.1.

Google regularly updates the app to improve recognition accuracy and fix bugs. These improvements help make visual searches more reliable and useful for everyday tasks.