The rapid ascent of ChatGPT has captured global attention, demonstrating the vast potential of generative AI to transform how we engage with technology and information. While its benefits are clear, it’s crucial to address the concerns surrounding its growing influence.

By promoting responsible development, transparency, and open dialogue, we can leverage AI’s power for societal improvement while mitigating potential risks. The emergence of OpenAI’s ChatGPT has sparked a contentious debate about the impact of generative AI, especially regarding academic integrity, ethical concerns, and the responsibilities of AI developers and users in society.

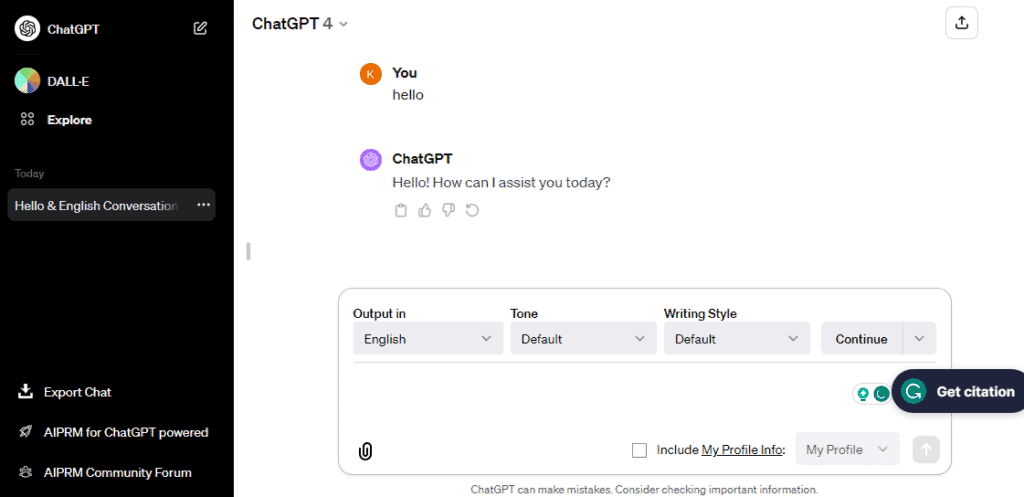

The ChatGPT Phenomenon

ChatGPT’s meteoric rise to prominence has ignited a global conversation about the far-reaching implications of generative AI. While this technology unlocks new possibilities for creativity and productivity, it also raises concerns about its potential misuse and unintended consequences. Let’s delve deeper into this complex landscape.

The Power and Promise of Generative AI

Generative AI, exemplified by models like ChatGPT, has demonstrated remarkable capabilities in generating human-like text, images, and even videos. This technology holds immense promise in various fields, including:

- Creative Content Generation: From writing assistance to generating artwork and music, generative AI can fuel creativity and inspire new forms of expression.

- Productivity Enhancement: AI-powered tools can automate routine tasks, freeing up human time for more strategic and creative endeavors.

- Problem-Solving and Innovation: Generative AI can assist in complex problem-solving, data analysis, and the development of new solutions across industries.

The Concerns and Challenges

Despite its potential benefits, generative AI also raises valid concerns that demand careful consideration:

- Misinformation and Disinformation: The ease with which AI can generate convincing content threatens to accelerate the spread of misinformation and disinformation.

- Job Displacement: As AI automates tasks across various sectors, there’s growing anxiety about its potential impact on employment and the workforce.

- Bias and Discrimination: AI models trained on biased data can perpetuate and amplify existing societal prejudices, leading to discriminatory outcomes.

- Privacy and Security: The collection and use of personal data to train and operate AI systems raise concerns about privacy breaches and data misuse.

- Lack of Transparency and Accountability: The complexity of AI models often makes it difficult to understand their decision-making processes, raising questions about transparency and accountability.

Addressing the Challenges

| Challenge | Potential Solutions |
|---|---|
| Misinformation & Disinformation | Develop robust AI detection tools. Promote media literacy and critical thinking. Implement responsible AI usage guidelines. |
| Job Displacement | Invest in education and training for new skills. Explore policies for a just transition. Foster collaboration between humans and AI. |
| Bias & Discrimination | Ensure diverse and representative training data. Implement bias detection and mitigation techniques. Promote ethical AI development practices. |
| Privacy & Security | Strengthen data protection regulations. Implement privacy-preserving AI techniques. Increase transparency in data collection and usage. |
| Lack of Transparency & Accountability | Develop explainable AI models. Establish clear guidelines for AI accountability. Encourage ongoing research into AI ethics and governance. |

Short Summary:

- ChatGPT’s rise highlights significant ethical and practical challenges in academia and industries.

- Concerns include the potential for misuse in academic settings and the risks of diminished human-AI interaction.

- Stakeholders are urged to adapt and develop responsible guidelines for the integration of generative AI technologies.

How ChatGPT is Growing In Influence

As technology advances, generative AI models like OpenAI’s ChatGPT are increasingly integrated into various sectors, including education, business, and technology. With its ability to generate human-like text, ChatGPT presents unique opportunities and challenges for academic integrity, ethical considerations, and the responsibilities of developers. The rapid recognition of its capabilities has raised alarms about potential misuse and societal impact, sparking discussions among educators, researchers, and policymakers.

A Transformative Force in AI

ChatGPT is a major advancement in AI language models, with applications ranging from content generation to personalized learning experiences. It was released on November 30, 2022, and quickly gained popularity for producing coherent and contextually relevant responses across various domains. This technology is not only transforming communication but also changing the landscape of research and creativity. According to Damian Okaibedi Eke from the School of Computer Science and Informatics at De Montfort University, ChatGPT is part of the Generative Pre-trained Transformer (GPT) family. It excels at interactive dialogue and at explaining complex information, which distinguishes it from earlier models such as GPT-3. As Eke mentioned, “ChatGPT builds upon the foundations laid by its predecessors but with a refined focus on conversational applications.”

Widespread Applications of ChatGPT

This versatile tool has found applications across various sectors, such as customer service, healthcare, education, and even legal settings. For example, recent studies reveal that ChatGPT passed exams at reputable institutions, indicating an impressive level of comprehension and response quality, which was corroborated by Choi et al. (2023) in their analysis of its performance in law school settings.

Challenges Surrounding ChatGPT

Despite its advantages, the rapid adoption of ChatGPT brings forward pressing challenges, particularly in the academic realm. Concerns about academic dishonesty and integrity are at the forefront of discussions. The International Center for Academic Integrity defines academic integrity as a commitment to values such as honesty, trust, and responsibility. The potential for students to pass off AI-generated content as their own creates an ethical dilemma that has yet to be effectively addressed in academic policies.

“At the moment, it’s looking a lot like the end of essays as an assignment for education,” stated an anonymous academic, highlighting the pressing need for institutions to adapt to these new challenges.

Potential Threats to Academic Integrity

Concerns about forgery and misrepresentation in academic work due to AI tools like ChatGPT echo fears associated with traditional cheating methods. Because ChatGPT is easy to access and interact with, students can obtain responses tailored to their assignments, which raises questions about the authenticity of student submissions. Eke warns, “If students begin outsourcing their writing to ChatGPT, universities face a significant shift in evaluating student performance.”

Existing Mitigation Efforts

Though institutions employ tools like Turnitin and Unicheck to uphold academic integrity, these tools are failing to adapt to the unique challenges posed by generative AI. Most plagiarism detection systems have not yet integrated AI-generated content as a specific category of concern. Institutions therefore urgently need to clarify their academic integrity policies to address the potential misuse of AI in academic writing.

Future Directions: Balancing Innovation and Responsibility

As ChatGPT evolves, institutions must not only recognize its capabilities but also proactively seek ways to incorporate it into teaching and research frameworks. Responsible use starts with clear guidelines for acknowledging AI use in academic projects.

Several actionable measures can be undertaken:

- Create educational programs targeting both faculty and students about generative AI and its implications for academic integrity.

- Update academic integrity policies to include specific guidelines regarding the use of AI tools and their acknowledgment in research outputs.

- Collaborate with AI developers to improve detection tools that can effectively identify AI-generated texts in assessments.

- Develop training sessions for educators so they understand how to adapt assessments in ways that minimize dependence on AI for brainstorming and writing.

The Role of AI in Redefining Academia

Generative AI not only creates challenges but also represents an opportunity for transformation in higher education. Instead of viewing tools like ChatGPT as threats, educators can embrace them as innovative mediums that enhance the learning experience. For instance, redesigning essay assignments as project-based evaluations may engage students in critical thinking while minimizing dependence on AI-generated writing.

Engaging Stakeholders for a Collaborative Approach

To address these challenges comprehensively, input from a range of stakeholders is essential. This includes collaboration between academic institutions, policymakers, and AI technology developers. Each group must participate in discussions to ensure alignment on how to responsibly integrate AI in educational frameworks while safeguarding academic integrity.

The Future of AI

The emergence of ChatGPT highlights the changing relationship between technology and academia. While its ability to produce human-like text raises concerns for academic honesty, it also presents unique educational opportunities. Finding a balance between using AI to improve learning experiences and upholding ethical standards is crucial.

As new generations of students interact with various technologies, it’s important for educators to reconsider how they design assessments. This requires a reassessment of traditional teaching methods in light of AI progress. All stakeholders in education need to come together to develop an approach that encourages innovation while maintaining ethical standards.

“We must not only embrace the capabilities of generative AI but also chart a responsible path towards its integration in educational practices to safeguard the values of academic integrity,” asserts Eke, calling for collective action from all stakeholders involved.

The path forward requires thoughtful engagement across societal sectors, emphasizing training, policy refinement, and collaboration to navigate this new era effectively. The interplay between technological innovation and ethical use will shape the future of academia and carry broader implications for society.