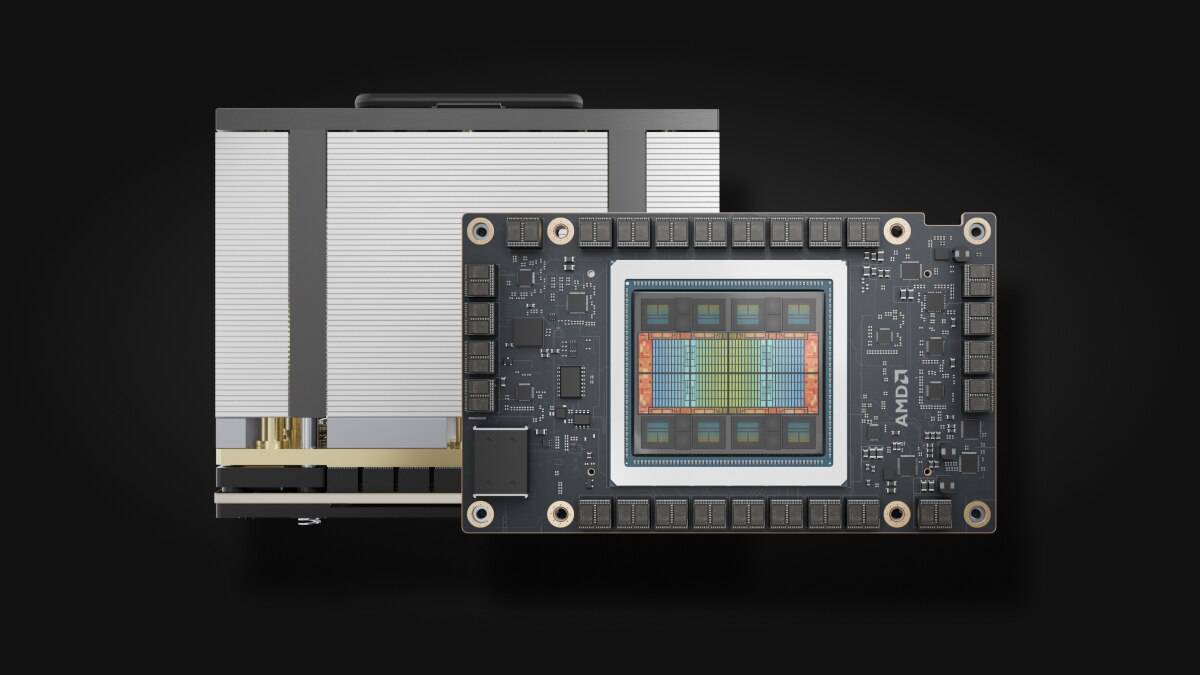

AMD has introduced a new AI GPU, the Instinct MI325X, which is the first AI chip to offer 256GB of memory and is aimed squarely at the market share NVIDIA has carved out for itself over the years. The GPU combines impressive memory capacity with strong performance, and AMD claims it beats Nvidia’s ultra-popular H200 by 20-40% in several key performance areas. AMD also announced that it will release the MI355X next year, an AI GPU built on the CDNA 4 architecture with 288GB of HBM3E memory.

As demand for advanced AI processing grows, AMD’s focus on high memory capacity and performance is likely to shape the landscape of data centers and artificial intelligence. We’re still waiting to see how Intel will respond and whether it, too, can pressure NVIDIA to lower prices. The AI chip market is huge, but NVIDIA’s lead in power and performance has been difficult to compete with.

AMD MI325X: A New Powerhouse for AI and Cloud Workloads

MI325X Accelerator Overview

AMD has just launched the MI325X accelerator, a new hardware solution designed to boost performance in AI inference and cloud workloads. The accelerator is built on AMD’s CDNA™ 3 architecture, and its specifications are aimed at delivering high performance and efficiency.

Key Features and Benefits

- High-Performance AI Inference: The MI325X excels in AI inference tasks, making it ideal for applications like natural language processing, image recognition, and recommendation systems. It delivers exceptional performance per watt, allowing for cost-effective deployment in large-scale AI environments.

- Optimized for Cloud Workloads: This accelerator isn’t just for AI. It’s also optimized for various cloud workloads, including video transcoding, database processing, and high-performance computing (HPC). This versatility makes it a valuable asset for cloud providers and enterprises.

- Advanced Technology: The MI325X leverages AMD’s advanced technologies, such as Infinity Cache™ and 4th Gen Infinity Fabric™, to maximize memory bandwidth and interconnect performance. This translates to faster processing and reduced latency in demanding applications.

- Enhanced Security Features: Security is a top priority in today’s data centers. The MI325X incorporates robust security features to protect sensitive data and ensure the integrity of AI and cloud workloads.

AMD MI325X Specifications:

| Feature | Specification |
|---|---|
| Architecture | AMD CDNA™ 3 |
| Compute Units | 304 |
| Peak FP32 Performance | Up to 163.4 TFLOPS |
| Memory Capacity | 256GB HBM3E (288GB as announced at Computex 2024) |
| Memory Bandwidth | Up to 6 TB/s |
| Interconnect | 4th Gen Infinity Fabric™ |
| Form Factor | OAM (OCP Accelerator Module) |

Target Applications and Impact

The Mi325X is poised to make a significant impact in various fields:

- Cloud Computing: Cloud service providers can leverage the Mi325X to accelerate their AI services, offering faster and more efficient solutions to their customers.

- Enterprise AI: Businesses can deploy the Mi325X to power their internal AI applications, such as fraud detection, customer service automation, and predictive analytics.

- Research and Development: Researchers can use the MI325X’s high performance to accelerate AI model training and development, potentially speeding up breakthroughs in areas like healthcare and climate science.

With its impressive performance, efficiency, and security features, the AMD MI325X is set to become a key player in the evolving landscape of AI and cloud computing.

Short Summary:

- Introduction of AMD’s new MI325X GPU, announced with 288GB of HBM3E memory (256GB in the final shipping configuration).

- Significant performance improvements over Nvidia’s H200, including increased memory bandwidth.

- Future product roadmap includes the MI350 series expected in 2025 and MI400 series in 2026.

On June 2, 2024, during the Computex trade show in Taipei, Taiwan, AMD unveiled its latest innovation in the field of data center graphics processing with the introduction of the Instinct MI325X GPU. This cutting-edge accelerator is designed to enhance artificial intelligence (AI) workloads and machine learning applications, emphasizing AMD’s intention to solidify its position against competitors like Nvidia.

The MI325X stands out for its specifications. As announced at Computex, it carries 288GB of HBM3E memory and up to 6 terabytes per second (TB/s) of memory bandwidth; AMD later set the shipping configuration at 256GB. That is roughly double the 141GB of Nvidia’s H200, which also trails at 4.8TB/s of bandwidth, and 1.5 times the 192GB of AMD’s own MI300X. Tom’s Hardware reported that “the MI325X delivers 30% faster computing speed compared to NVIDIA’s H200,” marking a significant leap in performance.
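To see why these memory figures matter for AI workloads, here is a back-of-the-envelope Python sketch. It computes the MI325X’s capacity and bandwidth ratios against the H200. It also computes a simple roofline-style ceiling on single-stream decode speed. This rests on several assumptions: decoding is purely memory-bandwidth-bound (every generated token reads all weights once), units are decimal, and the model is a hypothetical 140GB one (70B parameters at FP16). Real throughput will be lower and depends on batching, KV-cache traffic, and software.

```python
# Figures quoted in the article (decimal units). The MI325X was announced with
# 288GB; AMD's shipping configuration is 256GB.
MI325X_MEM_GB = {"announced": 288, "shipping": 256}
H200_MEM_GB = 141
MI325X_BW_TBPS = 6.0
H200_BW_TBPS = 4.8


def decode_ceiling_tokens_per_s(bandwidth_tbps: float, weight_gb: float) -> float:
    """Roofline-style upper bound on single-stream decode speed.

    Assumes each generated token streams every weight from memory exactly once
    and ignores KV-cache reads, batching, and compute limits.
    """
    return (bandwidth_tbps * 1e12) / (weight_gb * 1e9)


for label, mem in MI325X_MEM_GB.items():
    print(f"Memory vs H200 ({label}): {mem / H200_MEM_GB:.2f}x")
print(f"Bandwidth vs H200: {MI325X_BW_TBPS / H200_BW_TBPS:.2f}x")

weights_gb = 140  # hypothetical 70B-parameter model at 2 bytes per parameter
for name, bw in [("MI325X", MI325X_BW_TBPS), ("H200", H200_BW_TBPS)]:
    print(f"{name}: at most {decode_ceiling_tokens_per_s(bw, weights_gb):.1f} tokens/s")
```

The ceiling scales linearly with bandwidth, so the two bounds differ by the same 1.25x as the raw bandwidth figures.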

“With the new AMD Instinct accelerators… AMD underscores the critical expertise to build and deploy world-class AI solutions,” said Forrest Norrod, Executive Vice President and General Manager of AMD’s Data Center Solutions Business Group.

During her keynote, AMD CEO Dr. Lisa Su emphasized the company’s commitment to an annual cadence of product launches, with each year bringing new advances in AI performance and memory capacity. This approach is a proactive response to the rapidly evolving AI and data center markets.

Performance Edge Over Competitors

According to AMD, the MI325X not only surpasses Nvidia’s offerings in memory but also delivers superior compute performance, claiming 1.3 times the peak theoretical FP16 and FP8 compute performance of the H200. Together, the added memory and compute let customers run larger, more complex AI models on fewer accelerators.
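AMD’s 1.3x figure refers to peak theoretical throughput. The following minimal Python check shows where that ratio comes from. The spec-sheet numbers it uses are assumptions here, taken as dense values without structured sparsity: 1,307.4 and 2,614.9 TFLOPS (FP16 and FP8) for the MI325X, and 989.5 and 1,979 TFLOPS for the H200.

```python
# Peak theoretical dense throughput in TFLOPS (assumed spec-sheet values,
# without structured sparsity). These are not measured results.
PEAK_TFLOPS = {
    "MI325X": {"fp16": 1307.4, "fp8": 2614.9},
    "H200": {"fp16": 989.5, "fp8": 1979.0},
}


def peak_ratio(precision: str, a: str = "MI325X", b: str = "H200") -> float:
    """Ratio of peak theoretical throughput of accelerator `a` over `b`."""
    return PEAK_TFLOPS[a][precision] / PEAK_TFLOPS[b][precision]


for precision in ("fp16", "fp8"):
    print(f"{precision.upper()}: {peak_ratio(precision):.2f}x")  # about 1.32x each
```

Peak ratios say nothing about sustained throughput on real models. AMD’s inference claims come from its own benchmarks.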

For instance, AMD’s testing showed that “customers can achieve 1.3 times better inference performance” when running models such as Meta’s Llama-3 70B and Mistral-7B on MI300X accelerators with the ROCm software stack. ROCm continues to evolve, improving performance for large language models (LLMs) across various sectors. AMD’s partnership with organizations like Hugging Face, which extensively tests AI models on AMD hardware, highlights growing software support for AMD’s accelerators.
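Memory capacity determines whether a model’s weights fit. It also determines how much room is left for the KV cache, which grows with context length and batch size. The sketch below estimates that headroom for Llama-3 70B on a single GPU. The model shape (80 layers, 8 grouped-query KV heads, head dimension 128) comes from the published model configuration and is treated as an assumption here, using a nominal 70B parameter count. The sketch further assumes FP16 weights and cache, decimal GB, and that all memory is usable, so real budgets are smaller.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelShape:
    params_billion: float  # nominal parameter count
    layers: int
    kv_heads: int          # key/value heads (grouped-query attention)
    head_dim: int


# Assumed shape of Meta's Llama-3 70B, using a nominal 70B parameter count.
LLAMA3_70B = ModelShape(params_billion=70, layers=80, kv_heads=8, head_dim=128)


def weight_bytes(m: ModelShape, bytes_per_value: int = 2) -> float:
    return m.params_billion * 1e9 * bytes_per_value


def kv_bytes_per_token(m: ModelShape, bytes_per_value: int = 2) -> int:
    # One key and one value vector per KV head, per layer, per token.
    return 2 * m.layers * m.kv_heads * m.head_dim * bytes_per_value


def kv_token_budget(mem_gb: float, m: ModelShape, bytes_per_value: int = 2) -> int:
    """Tokens of KV cache that fit after loading the weights (0 if they don't fit)."""
    free = mem_gb * 1e9 - weight_bytes(m, bytes_per_value)
    return max(0, int(free // kv_bytes_per_token(m, bytes_per_value)))


for name, mem_gb in [("MI325X", 256), ("H200", 141)]:
    print(f"{name}: ~{kv_token_budget(mem_gb, LLAMA3_70B):,} cached tokens")
```

Under these assumptions the MI325X leaves room for roughly 354,000 cached tokens, versus about 3,000 on the H200, where FP16 weights nearly fill the memory.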

“The AMD Instinct MI300X accelerators continue their strong adoption from numerous partners and customers… a direct result of the AMD Instinct MI300X accelerator exceptional performance and value proposition,” stated Brad McCredie, AMD’s Corporate Vice President for Data Center Accelerated Compute.

Looking Ahead: The Future of AMD’s GPU Roadmap

The unveiling of the MI325X also included a glance at AMD’s roadmap for future advancements. AMD is preparing for the launch of the MI350 series powered by CDNA 4 architecture, which is set to debut in 2025. This architecture promises substantial improvements in AI inference performance, projected to offer a staggering 35 times improvement over the previous generation MI300 series. This leap indicates AMD’s focus on enhancing machine learning capacity to tackle increasingly complex models.

Following the MI350 series, AMD’s plans also include an MI400 series anticipated for 2026, built on the innovative CDNA “Next” architecture, which will further advance the company’s GPU capabilities in the AI domain.

“AMD continues to deliver on our roadmap, offering customers the performance they need and the choice they want,” highlighted Dr. Lisa Su during her press conference, reinforcing the company’s commitment to providing tailored AI infrastructure solutions.

Strategic Collaborations and Industry Impact

The recent announcements underscore AMD’s strong relationships with key players in the industry, who are already utilizing the MI300X accelerators in various applications. Noteworthy collaborations include:

- Microsoft Azure employs the MI300X for its Azure OpenAI services.

- Dell Technologies integrates MI300X into its enterprise-focused PowerEdge XE9680 servers.

- Lenovo utilizes MI300X for innovative Hybrid AI solutions with their ThinkSystem SR685a V3 servers.

- Hewlett Packard Enterprise uses the accelerators in the HPE Cray XD675, addressing high-end AI workloads.

With increasing demand for AMD’s MI300X accelerators, AMD aims to leverage these partnerships to solidify its market position and further enhance the capabilities of its offerings across multiple industries.

Adapting to Rapid Technological Changes

As the AI and data center markets continue to transform, AMD’s strategies signify a focused effort to keep pace with technological advancements. The MI325X, by integrating HBM3E memory technology, positions AMD well to address the current appetite for enhanced memory and performance in AI applications. This move also signifies a step towards aligning with global trends favoring higher memory capacities necessary for training extensive AI models.

Analysts suggest that AMD’s ability to innovate quickly and respond to market demands will be crucial to its success moving forward. With Nvidia also ramping up efforts to advance its offerings, the competition in the GPU market is likely to intensify, pushing both companies to innovate continuously.

AMD MI325X vs. NVIDIA H100 and H200

The AMD MI325X is entering a competitive market dominated by NVIDIA’s H100 and H200 accelerators. Here’s a table comparing the key specifications and features of these three AI accelerators (NVIDIA figures are for the SXM versions):

| Feature | AMD MI325X | NVIDIA H100 (SXM) | NVIDIA H200 (SXM) |
|---|---|---|---|
| Architecture | AMD CDNA™ 3 | NVIDIA Hopper | NVIDIA Hopper |
| Compute Units / SMs | 304 CUs | 132 SMs | 132 SMs |
| Peak FP32 Performance | 163.4 TFLOPS | 67 TFLOPS | 67 TFLOPS |
| Peak FP16 Performance (dense) | 1,307.4 TFLOPS | ~990 TFLOPS | ~990 TFLOPS |
| Memory Capacity | 256GB HBM3E | 80GB HBM3 | 141GB HBM3e |
| Memory Bandwidth | 6 TB/s | 3.35 TB/s | 4.8 TB/s |
| Interconnect | 4th Gen Infinity Fabric™ | NVLink 4.0 | NVLink 4.0 |
| Form Factor | OAM | SXM5 (PCIe version also available) | SXM5 |
| Key Advantages | Largest memory capacity, highest memory bandwidth, highest peak FP16/FP8 compute of the three | Established CUDA software ecosystem, broad availability | More memory and bandwidth than the H100 on the same Hopper architecture |
| Target Workloads | AI inference and training, HPC | AI training, large language models, HPC | AI training, large language models, HPC |

Analysis:

- Performance: On paper, the MI325X leads in both peak FP32 and peak FP16 throughput, consistent with AMD’s claim of 1.3 times the H200’s peak FP16 and FP8 compute. These are theoretical peaks, however, and real-world results depend heavily on software optimization, where NVIDIA’s mature CUDA ecosystem remains a strength.

- Memory: Memory is the MI325X’s clearest advantage. Its 256GB of HBM3E at 6 TB/s exceeds both the H200 (141GB, 4.8 TB/s) and the H100 (80GB, 3.35 TB/s). This matters for large datasets, long contexts, and models that would otherwise need to be split across several GPUs.

- Interconnect: NVLink 4.0 on the NVIDIA GPUs provides up to 900 GB/s of GPU-to-GPU bandwidth, and NVIDIA’s multi-GPU software stack is well established, which benefits large multi-GPU setups.

- Pricing and Availability: AMD is likely to position the MI325X as a cost-effective alternative to both NVIDIA options, which could make it attractive to budget-conscious organizations. However, NVIDIA’s established market position and software ecosystem give it a competitive edge.

Bottom Line

The AMD MI325X offers a compelling alternative in the AI accelerator market. It outpaces the H100 and H200 in memory capacity, memory bandwidth, and peak theoretical compute, while NVIDIA retains the advantages of its established software ecosystem and market position. The best choice depends on the specific needs and budget of the user.
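For sizing decisions, a common first step is to count how many accelerators are needed just to hold a model’s weights. The minimal Python sketch below does this for the MI325X (256GB), H100 SXM (80GB), and H200 (141GB). It uses a hypothetical 400B-parameter model at FP16 and an assumed 90% usable-memory fraction to leave room for runtime overhead. It ignores KV cache, activations, and parallelism constraints, so it gives a lower bound.

```python
import math

# Memory per accelerator in decimal GB (MI325X shipping configuration, H100 SXM, H200).
MEMORY_GB = {"AMD MI325X": 256, "NVIDIA H100 (SXM)": 80, "NVIDIA H200": 141}


def min_gpus_for_weights(params_billion: float, bytes_per_param: float,
                         mem_gb: float, usable_fraction: float = 0.9) -> int:
    """Smallest number of GPUs whose usable memory can hold the weights."""
    if not 0 < usable_fraction <= 1:
        raise ValueError("usable_fraction must be in (0, 1]")
    needed = params_billion * 1e9 * bytes_per_param
    per_gpu = mem_gb * 1e9 * usable_fraction
    return math.ceil(needed / per_gpu)


# Hypothetical 400B-parameter model stored in FP16 (2 bytes per parameter).
for name, mem in MEMORY_GB.items():
    print(f"{name}: {min_gpus_for_weights(400, 2, mem)} GPUs")
```

Under these assumptions the weights need 4 MI325X GPUs, versus 7 H200s or 12 H100s. This is the practical meaning of the memory advantage described in the analysis.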