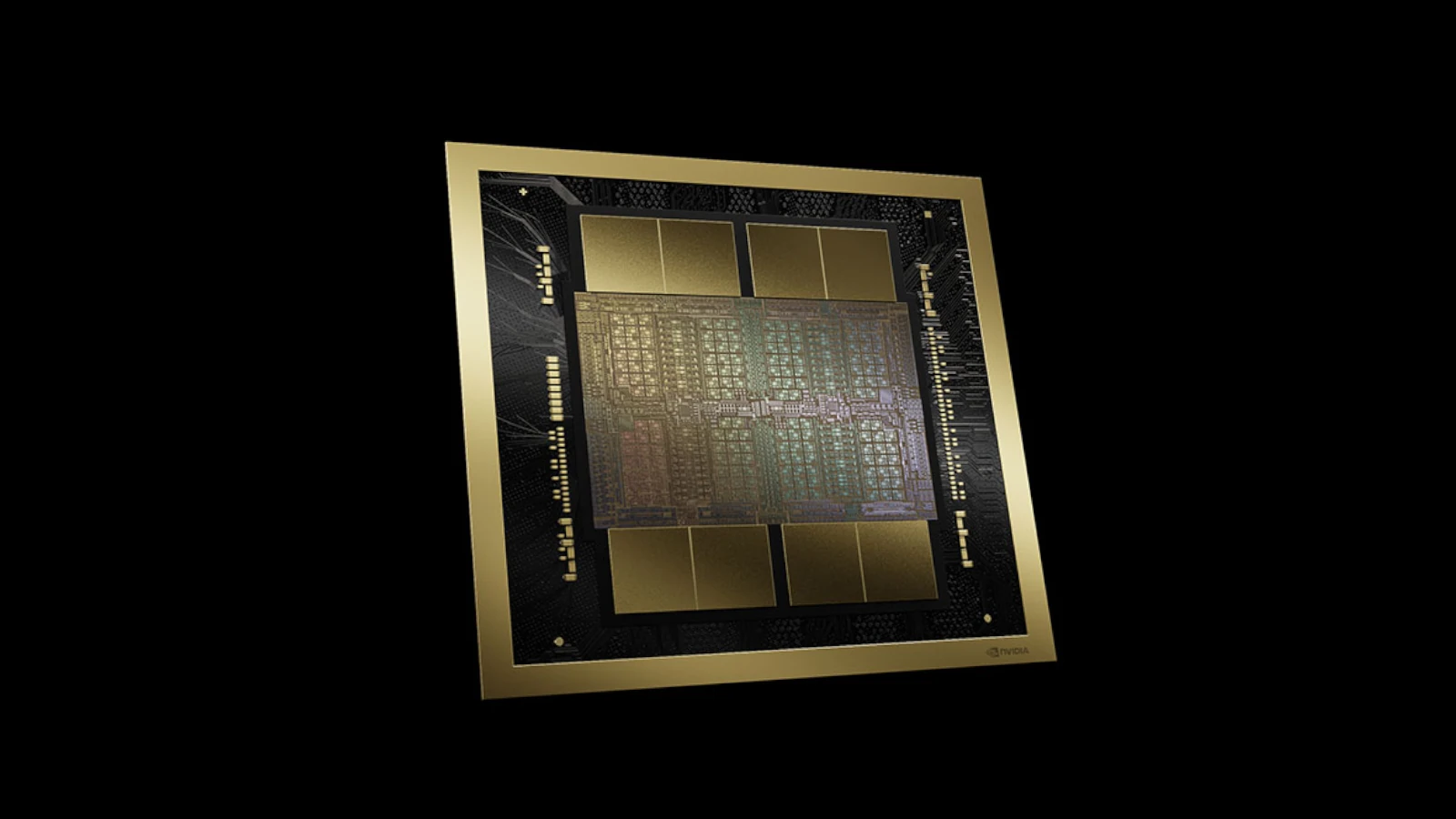

Nvidia is a leading company in AI, known for its powerful and efficient data center GPUs. Its Hopper GPUs and Grace Hopper superchips made important contributions and are now being succeeded by Blackwell, which is designed to be a faster and more capable next-generation chip. Reports say Blackwell could be up to four times faster than its predecessor. It offers more features, more flexibility, and more transistors to handle complex AI tasks.

This improvement is expected to have a significant impact on the industry, enabling new applications and enhancing existing systems. Both Blackwell and Hopper are GPU architectures aimed at high-performance computing, especially AI. Hopper was a big step forward, and Blackwell builds on its strengths with greater performance and efficiency for demanding workloads such as large language models.

Nvidia officially announced the Blackwell graphics processing unit (GPU) microarchitecture at its GTC 2024 keynote on March 18, 2024. The company expects to ship several billion dollars’ worth of Blackwell GPUs in the fourth quarter of 2024.

| Category | Details |
|---|---|
| Features | Between 2.5x and 5x higher performance and more than twice the memory capacity and bandwidth of the H100-class devices it replaces |
| Architecture | The most complex architecture ever built for AI |
| Availability | Microsoft announced Blackwell GPUs will be available in Azure in early 2025. Google and Amazon have announced the availability of Blackwell systems in the cloud but have not announced launch dates. |

Blackwell is named after statistician and mathematician David Blackwell. Here’s a breakdown of how Blackwell and Hopper differ.

Blackwell vs Hopper

Nvidia Blackwell

- Architecture: Nvidia’s latest GPU architecture, succeeding Hopper.

- Focus: Designed for accelerated computing and generative AI, particularly large language models (LLMs).

- Key Improvements:

  - Increased Performance: Offers significantly higher performance for AI training and inference compared to Hopper.

  - Larger Memory: Features more memory capacity and bandwidth with HBM3e memory.

  - Enhanced Efficiency: Includes architectural improvements for better energy efficiency.

  - Improved Security: Advanced confidential computing capabilities to protect AI models and data.

  - Dedicated Decompression Engine: Accelerates database queries for data analytics.

Nvidia Hopper

- Architecture: Nvidia’s previous-generation GPU architecture, launched in 2022.

- Focus: Also aimed at accelerated computing and AI, but with a broader focus on various workloads.

- Key Features:

  - Transformer Engine: Combines Tensor Core hardware with software to accelerate transformer models using mixed precision formats such as FP8 and FP16.

  - Dynamic Programming: DPX instructions improve performance for dynamic programming algorithms.

  - High-Bandwidth Memory: Uses HBM3 memory for fast data access.

Here’s a table summarizing the key differences:

| Feature | Nvidia Blackwell | Nvidia Hopper |
|---|---|---|
| Launch Date | 2024 | 2022 |
| Memory | HBM3e | HBM3 |
| Transistor Count | 208 billion (B200) | 80 billion (H100) |
| Peak AI Performance | Up to 20 petaflops at FP4 (B200) | Up to 4 petaflops at FP8 (H100) |
| Key Focus | Generative AI, LLMs | Accelerated computing, AI |
| Confidential Computing | Enhanced capabilities | Present, but less advanced |
| Decompression Engine | Dedicated | No dedicated engine |
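To put the table’s headline figures side by side, the generational ratios can be worked out directly. The short Python sketch below uses only the transistor and petaflops numbers quoted in the table; the dictionary layout and the helper name `generation_ratio` are illustrative choices, not anything published by Nvidia. The petaflops values are peak vendor figures that may be quoted at different precisions, so the result is a rough upper bound rather than a benchmark.

```python
# Headline figures copied from the comparison table above.
# These are vendor-quoted peak numbers, not measured benchmarks.
SPECS = {
    "B200": {"transistors_billion": 208, "peak_ai_petaflops": 20},
    "H100": {"transistors_billion": 80, "peak_ai_petaflops": 4},
}


def generation_ratio(metric: str, new: str = "B200", old: str = "H100") -> float:
    """Return how many times larger `metric` is on `new` than on `old`."""
    return SPECS[new][metric] / SPECS[old][metric]


if __name__ == "__main__":
    for metric in ("transistors_billion", "peak_ai_petaflops"):
        print(f"{metric}: {generation_ratio(metric):.1f}x")
```

The transistor count grows by 2.6x while the quoted peak AI throughput grows by 5x, which suggests that part of the gain comes from architectural changes rather than from transistor count alone.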

Which is better?

Blackwell is the clear successor with significant improvements in performance, memory, and efficiency. However, Hopper GPUs are still powerful and relevant for various workloads, especially those that don’t require the extreme capabilities of Blackwell.

Comparison Table

| Feature | Nvidia Blackwell | Nvidia Hopper |

|---|---|---|

| Architecture | Next-generation | Previous-generation |

| Performance (AI Training) | Up to 2.5x faster | Baseline |

| Performance (Generative AI) | Up to 25x faster | Baseline |

| Transistors | 208 billion | 80 billion |

| Manufacturing Process | Custom-built TSMC 4NP | TSMC 4N |

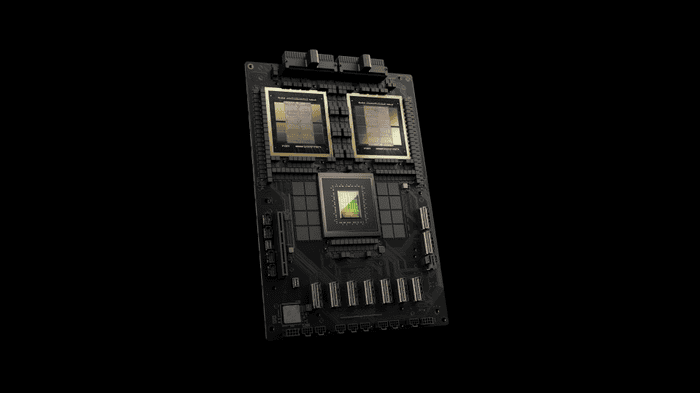

| Core Technology | Dual NVIDIA B200 Tensor Core GPUs | Single H100 Tensor Core GPU |

| Interconnect | 10 TB/s chip-to-chip | 900 GB/s |

| Applications | Focuses on generative AI, data processing, scientific computing | Broader range of AI applications |

| Power Efficiency (Generative AI) | Up to 25x lower cost and energy consumption | Baseline |

| Integration | Tight integration with Grace CPU for efficient data flow | Less integrated CPU architecture |

| Products | DGX SuperPOD with Grace Blackwell Superchips | DGX Systems with H100 GPUs |
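As a rough illustration of what the interconnect row means in practice, the sketch below computes the best-case time to move a payload at each peak bandwidth quoted in the comparison table. It is a minimal Python example under stated assumptions: decimal units (1 TB = 1000 GB), a hypothetical 90 GB payload, and no latency or protocol overhead. The function name `ideal_transfer_seconds` is made up for this example, and the two figures may describe different kinds of link, so treat the comparison as indicative only.

```python
# Peak interconnect figures quoted in the comparison table, in GB/s.
# Decimal units are assumed (1 TB = 1000 GB).
BLACKWELL_GB_PER_S = 10_000  # 10 TB/s chip-to-chip
HOPPER_GB_PER_S = 900        # 900 GB/s


def ideal_transfer_seconds(payload_gb: float, bandwidth_gb_per_s: float) -> float:
    """Best-case time to move `payload_gb` at the given peak bandwidth.

    Ignores latency, protocol overhead, and contention, so real
    transfers will take longer than this lower bound.
    """
    if bandwidth_gb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return payload_gb / bandwidth_gb_per_s


if __name__ == "__main__":
    payload_gb = 90.0  # hypothetical payload size, chosen for round numbers
    for name, bandwidth in (("Blackwell", BLACKWELL_GB_PER_S), ("Hopper", HOPPER_GB_PER_S)):
        seconds = ideal_transfer_seconds(payload_gb, bandwidth)
        print(f"{name}: {seconds * 1000:.0f} ms")
```

Under these assumptions the 90 GB payload needs about 9 ms at 10 TB/s and 100 ms at 900 GB/s, a gap of roughly 11x.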

Additional Notes:

- Benchmarks for real-world performance differences may vary depending on specific workloads.

- Hopper architecture is still a powerful solution for various AI applications.

- Blackwell offers significant advantages for large language models and emerging AI fields.

Key Takeaways

- Nvidia’s Blackwell GPU represents a significant performance leap over the Hopper series.

- Blackwell’s increased speed and features could greatly impact AI applications and industry standards.

- The enhanced capabilities of Blackwell compared to Hopper highlight Nvidia’s continued innovation in AI technology.

Overview of Nvidia’s Blackwell and Hopper Architectures

Nvidia’s Blackwell and Hopper architectures offer a glimpse into the future of accelerated computing. They cater not only to the traditional demands of GPU computing but also to the broadening horizons of AI and machine learning workloads.

Blackwell: Advancements and Features

The Blackwell architecture brings significant enhancements over its predecessors. It introduces the second-generation Transformer Engine, which improves AI training and inference speeds. Blackwell GPUs, including those in the Nvidia GB200 Grace Blackwell Superchip, are built on a custom TSMC 4NP process, allowing for an increased transistor count and improved energy efficiency. These advancements make Blackwell well suited to large language models (LLMs) and other AI applications that demand high memory bandwidth and reliable performance.

Hopper: Innovations and Capabilities

Preceding Blackwell, the Hopper architecture laid the groundwork for a leap in AI processing with its H100 GPU. Hopper’s Tensor Core technology, equipped with the original Transformer Engine, provides accelerated AI calculations utilizing mixed precision formats such as FP8 and FP16. This architecture contributes to the growth of various sectors by delivering accelerated performance in AI workloads while maintaining cost-effectiveness and energy efficiency.
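One reason lower-precision formats matter is storage: an FP8 value occupies one byte, half the size of an FP16 value and a quarter of an FP32 value. The Python sketch below works through that arithmetic. The function name and the 70-billion-parameter model size are hypothetical, and the estimate counts weights only, ignoring activations, optimizer state, and any scaling metadata a real mixed-precision scheme would keep.

```python
# Storage width of each floating-point format, in bytes per value.
BYTES_PER_VALUE = {"FP32": 4, "FP16": 2, "FP8": 1}


def weights_footprint_gb(num_parameters: int, fmt: str) -> float:
    """Approximate memory needed to hold model weights in `fmt`, in GB (10^9 bytes)."""
    return num_parameters * BYTES_PER_VALUE[fmt] / 1e9


if __name__ == "__main__":
    params = 70_000_000_000  # hypothetical 70-billion-parameter model
    for fmt in ("FP32", "FP16", "FP8"):
        print(f"{fmt}: {weights_footprint_gb(params, fmt):.0f} GB")
```

For the hypothetical 70-billion-parameter model this gives 280 GB at FP32, 140 GB at FP16, and 70 GB at FP8, which is why narrower formats ease pressure on both memory capacity and memory bandwidth.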

Comparative Analysis of Performance Metrics

A direct comparison shows Blackwell ahead of Hopper on the headline metrics. The B200 packs 208 billion transistors against the H100’s 80 billion and is rated at up to 20 petaflops of peak AI performance against up to 4 petaflops, and it pairs that compute with faster HBM3e memory. Together, these gains lead to faster and more efficient AI model training and inference.

Connectivity and Integration Across Platforms

Both architectures also emphasize system integration and connectivity. Blackwell and Hopper support Nvidia’s NVLink interconnect and the CUDA software platform for data sharing and application scaling. Each also offers robust networking: Hopper integrates with Quantum-2 InfiniBand, while Blackwell is expected to support the NVLink 5 protocol and the new Quantum-X800 InfiniBand along with enhanced Ethernet networking. This lets both architectures fit into existing and future data center infrastructures.

Impact on Industry and Key Applications

This section addresses Nvidia’s new Blackwell GPUs compared to its Hopper predecessors, focusing on their industry impact and key application areas. It explores how these advancements support AI development, benefit businesses, foster collaborations, and drive Nvidia’s strategic vision within the technological landscape.

Significance in AI Development and Training

Nvidia Blackwell GPUs offer significant enhancements to AI development and training. With increased speed, they make it possible to handle complex language models and support emerging AI fields such as generative AI.

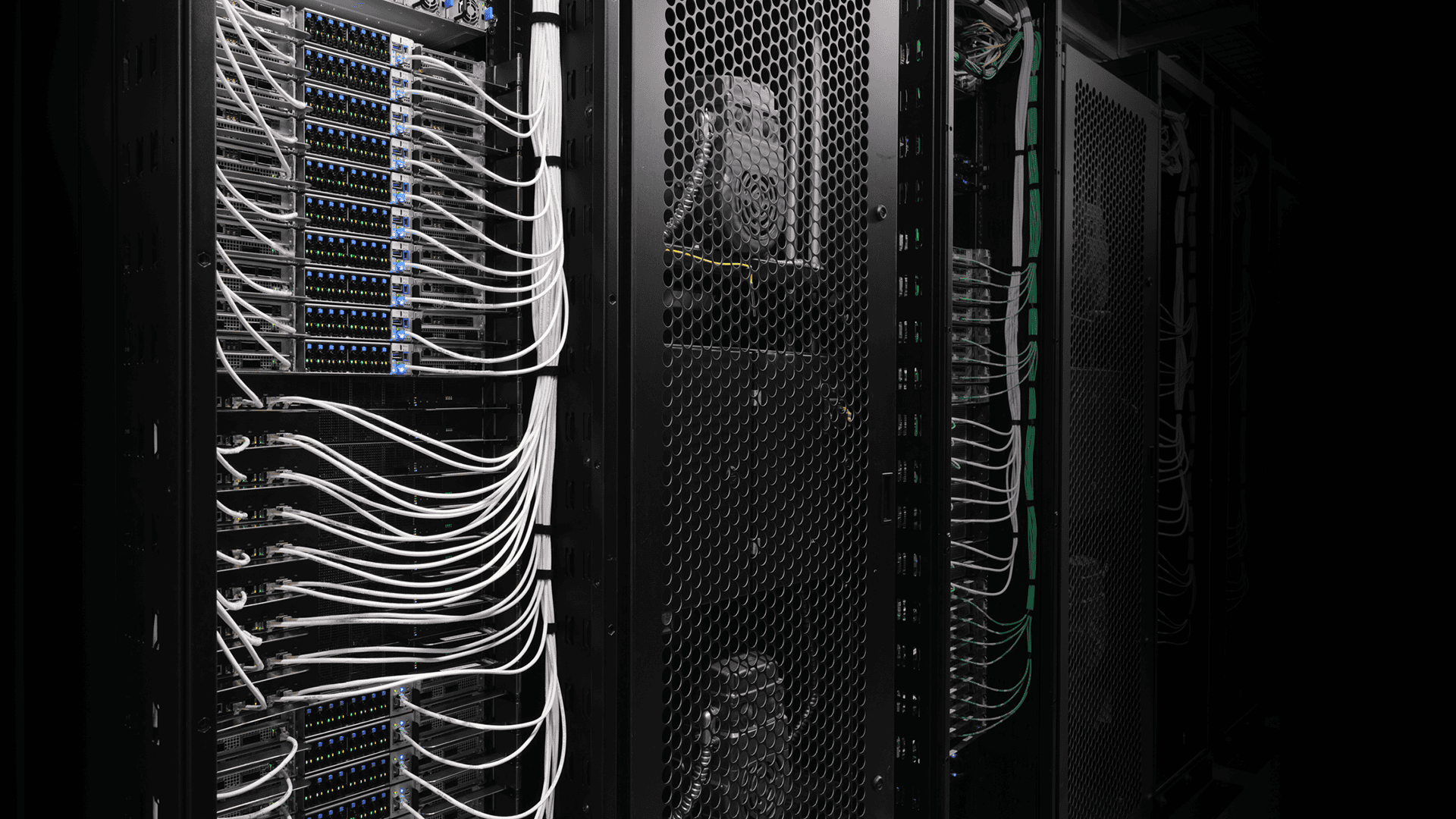

Advantages for Enterprise and Cloud Solutions

Blackwell’s performance advancements are a boon for businesses. These GPUs enhance cloud capabilities, making services like AWS and Google Cloud more efficient, thus helping enterprises grow.

Collaborations and Market Reception

Collaborations are core to Nvidia’s strategy. Partners like Microsoft reap the benefits of Blackwell’s power, with positive market reception boosting Nvidia’s industry standing.

Futuristic Trends and Next Steps for Nvidia

Blackwell sets the stage for Nvidia’s future. It paves the way for sovereign AI and large-scale AI computing, anticipating the industry’s direction and preparing the groundwork for the next innovations.

Nvidia’s Role in Computing Evolution

Nvidia, led by CEO Jensen Huang, continues to shape computing through cutting-edge GPU technologies. Announcements at keynotes, like GTC, emphasize this leadership.

Technological Advances in Memory and Processing

Blackwell introduces significant memory and processing improvements. Advances such as HBM3e memory and a dedicated decompression engine make Blackwell stand out.

Economic and Competitive Landscape of GPUs

Nvidia’s GPUs, including Blackwell, shape the competitive landscape. They influence the economics of AI by providing cost-effective solutions for customers.

Celebrating Diversity in Tech: David Harold Blackwell’s Legacy

Nvidia honors the legacy of David Harold Blackwell. Naming its GPU architecture after this influential mathematician reflects a commitment to celebrating diversity in the tech industry.

Strategic Insights: Nvidia’s Vision and Industry Forecast

Nvidia’s strategy is clear: the company aims to lead in GPU technology and to anticipate where the industry is heading.

Technological Breakthroughs: From GTC to Silicon Innovation

Nvidia unveils silicon innovations at GTC each year. The move from Hopper to Blackwell is an example of these ongoing technological breakthroughs.

Nvidia’s Infrastructure: Scaling with DGX SuperPOD and More

Nvidia’s infrastructure scales to new heights with Blackwell. Solutions like DGX SuperPOD benefit from these GPU upgrades, expanding AI’s potential.

AI and Ethics: Nvidia’s Approach to Responsible AI

Nvidia takes a thoughtful approach to AI and ethics. It’s committed to responsible AI growth, maintaining focus on aligning with ethical standards.

Global Outreach: Nvidia’s Partnerships Across Continents

The company’s global outreach is evident in its partnerships across continents. By working with a wide range of companies and services, from Oracle to Dell Technologies, Nvidia affirms its international presence.

Innovative Design: Powering the Future of AI with Nvidia Grace and Hopper

Nvidia’s innovative designs combine the Grace CPU with Hopper and now Blackwell GPUs. This powerful alliance advances AI to unprecedented levels.

Frequently Asked Questions

This section answers key queries you might have about Nvidia’s Blackwell and Hopper GPUs by comparing their features, discussing performance, release dates, pricing, and significance in AI advancements.

What are the differences in specifications between the Nvidia Blackwell and Hopper architectures?

Blackwell GPUs, notably the B100 and B200 models, use a dual-die design linked by a 10 TB/s chip-to-chip interconnect, whereas Hopper’s H100 Tensor Core GPU is a single die focused on AI and deep learning. The B200 has 208 billion transistors against the H100’s 80 billion, moves from HBM3 to HBM3e memory, and is rated at up to 20 petaflops of peak AI performance against up to 4 petaflops.

How do the Nvidia Blackwell and Hopper GPUs compare in gaming performance?

Specific details on gaming performance for Blackwell are scarce, as Nvidia has primarily discussed its enterprise use. Hopper itself is a data center architecture with no gaming products, so there is no direct gaming comparison between the two. Traditionally, however, architectural improvements in enterprise GPUs find their way into gaming GPUs, so future consumer cards could offer better performance than previous generations.

When is the expected release date for the Nvidia Blackwell?

Nvidia announced Blackwell on March 18, 2024, and expects to begin shipping Blackwell GPUs, which include models like the B100, in the fourth quarter of 2024.

What is the anticipated pricing for the Nvidia Blackwell GPU?

As with many enterprise-level GPUs, Nvidia hasn’t made official announcements regarding the pricing of the Blackwell series. Pricing will likely reflect its positioning as a high-performance AI and enterprise solution.

What makes the Nvidia Blackwell chip a significant advancement for AI applications?

The Blackwell architecture is designed to deliver between 2.5x and 5x the performance of its predecessor, with more than twice the memory capacity and bandwidth. GPUs such as the B100 promise significant performance improvements, which are essential for handling complex AI tasks like training and serving large language models.

Which company is responsible for manufacturing the Blackwell chip?

Nvidia, a leader in the GPU market, designs the Blackwell chips, while TSMC manufactures them on its custom 4NP process. Nvidia continues to innovate and push the boundaries of GPU technology with each new release.