Text-to-image models have revolutionized digital art creation. These AI-powered tools transform written descriptions into vivid images, opening new possibilities for artists, designers, and content creators. The best text-to-image models produce high-quality, photorealistic images from natural language prompts.

The field of AI image generation is rapidly evolving. New models and improvements emerge frequently, enhancing image quality, accuracy, and creative potential. Users can now generate unique visuals for various applications, from social media content to professional design projects, with just a few words.

Top Text-to-Image AI Choices

Understanding Text-to-Image AI

Text-to-image AI uses words to create pictures. You type a description, and the AI makes an image. This technology has gotten much better recently. Now, these programs can make very realistic and creative images. They are used for art, design, and many other things.

Leading Text-to-Image Models

Here are some of the best text-to-image models available:

- Recraft V3: This model is known for making high-quality images. It follows your instructions very well.

- FLUX1.1 [pro]: This model also makes great images. It can make many different types of pictures. It is good at understanding complex instructions.

- Ideogram v2: This model is very good at putting text into images. It can also edit existing images using text.

- Stable Diffusion XL (SDXL): This is a popular model that is free to use. It makes high-resolution images with good detail. Many people use it and make tools for it.

- DALL-E 3: This model is known for understanding language well. It makes very creative images. It works well with ChatGPT.

What to Look for in a Model

When choosing a text-to-image model, think about these things:

- Image Quality: How good do the pictures look? Are they realistic?

- Following Instructions: Does the model make what you ask for?

- Speed: How fast does it make images?

- Cost: Some models are free, others cost money.

- Extra Features: Does it have tools to edit images?

- Ease of Use: Is it easy to use the model?

Comparing the Models

| Rank | Model | Best For | Things to Know | How to Use |
|---|---|---|---|---|
| 1 | Recraft V3 | High-quality images, accurate results | May have limited access options | Recraft AI platform |
| 2 | FLUX1.1 [pro] | Great images, many styles | Access through different services, varying prices | APIs like Replicate |
| 3 | Ideogram v2 | Text in images, image editing | Can be slower than other models | Ideogram platform |
| 4 | Stable Diffusion XL | High resolution, free use | Needs some technical knowledge | Many websites and programs |
| 5 | DALL-E 3 | Creative images, easy to use | Can be more expensive | OpenAI API, ChatGPT Plus |

Choosing the Right Model

The best model depends on what you need. If you want easy use and creative images, DALL-E 3 is good. If you want free high-resolution images, use Stable Diffusion XL. If you want top image quality and accurate results, try Recraft V3 or FLUX1.1 [pro].
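The guidance above, together with the comparison table, can be written down as a simple lookup. This is only an illustrative sketch: the function name `recommend_models` and the need labels are hypothetical, and the mapping restates this article's suggestions rather than any benchmark.

```python
# Hypothetical helper: restates this article's recommendations as a lookup.
# The keys are made-up labels, not terms used by any of the model vendors.
RECOMMENDATIONS = {
    "easy_creative": ["DALL-E 3"],
    "free_high_resolution": ["Stable Diffusion XL"],
    "top_quality": ["Recraft V3", "FLUX1.1 [pro]"],
    "text_in_images": ["Ideogram v2"],
}


def recommend_models(need: str) -> list[str]:
    """Return the models this article suggests for a given need."""
    key = need.strip().lower()
    if key not in RECOMMENDATIONS:
        options = ", ".join(sorted(RECOMMENDATIONS))
        raise ValueError(f"Unknown need {need!r}; expected one of: {options}")
    # Return a copy so callers cannot modify the shared table.
    return list(RECOMMENDATIONS[key])


if __name__ == "__main__":
    print(recommend_models("top_quality"))
```

In practice you would weigh several needs at once (cost, speed, editing tools), so treat a lookup like this as a starting point for a shortlist.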

Using AI for Image Editing

Besides making new images, AI can also edit old ones. Some programs use AI to remove things from photos. Others can change the style of a picture. This is a growing area of AI. It gives people new ways to work with images.

A Look at the Best AI Text-to-Image Generation Models

1) Recraft V3

Recraft V3 is a leading text-to-image model developed by Recraft AI. It generates highly detailed and aesthetically pleasing images based on text prompts.

The model excels at achieving high levels of photorealism and artistic styles. It can create images that look like photographs, paintings, or other forms of art, depending on the prompt.

Recraft V3 emphasizes strong prompt adherence and control over the generated image. Users can use detailed prompts and parameters to guide the model and achieve specific visual results.

The system produces images with excellent composition, lighting, and detail. Its capabilities allow users to create professional-quality visuals for various applications, including marketing, design, and art.

Recraft AI continues to update and improve Recraft V3. They focus on enhancing image quality, prompt understanding, and user experience.

Experts recognize Recraft V3 for its strong performance in recent text-to-image benchmarks. Its ability to create high-quality images that closely match prompts sets it apart.

The model’s capabilities are constantly being expanded with new features and improvements. Users are exploring its potential for diverse creative projects.

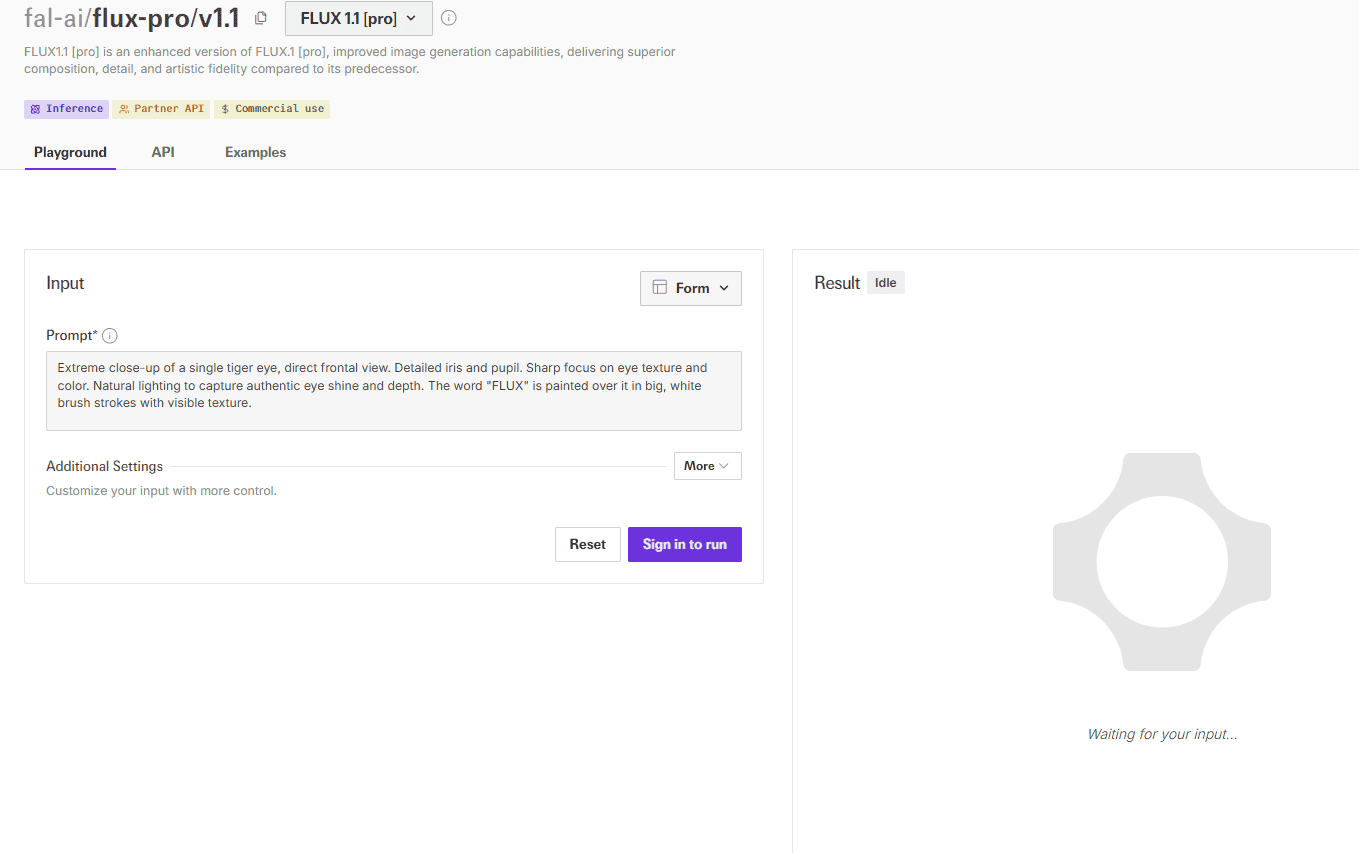

2) FLUX1.1 [pro]

FLUX1.1 [pro] is a high-performing text-to-image model developed by Black Forest Labs. It generates diverse and detailed images from text descriptions, often ranking highly in quality comparisons.

The model excels at generating a wide range of image styles and subjects. From photorealistic scenes to abstract art, FLUX1.1 [pro] demonstrates impressive versatility.

FLUX1.1 [pro] is designed for integration into various applications and workflows. It’s accessible through APIs like Replicate, Together.ai, and fal.ai, offering flexibility for developers and users.

The system produces images with good detail and composition. Its ability to handle complex prompts makes it suitable for advanced image generation tasks.

Black Forest Labs continues to refine and improve FLUX1.1 [pro]. They focus on enhancing its image quality, speed, and overall performance.

Experts often cite FLUX1.1 [pro] for its strong performance in benchmarks and its ability to generate diverse and high-quality outputs. Its API accessibility makes it a popular choice for developers.

The model’s capabilities are being explored for various uses, including creative content generation, design, and research. Its continuous development promises further advancements in its capabilities.

3) Ideogram v2

Ideogram v2 is a text-to-image model known for its exceptional ability to generate images containing realistic and legible text. It also offers powerful inpainting capabilities for editing existing images.

The model excels at accurately rendering text within images, a significant challenge for many other text-to-image models. It can create logos, signs, and other graphics with embedded text that appears natural and clear.

Ideogram v2 provides robust inpainting features, allowing users to edit existing images by providing text prompts. This enables precise control over image modifications and creative manipulations.

The system produces images with good overall quality and coherence. Its focus on text rendering and inpainting makes it particularly useful for design and creative applications.

The developers of Ideogram v2 are actively working on improvements and new features. They are focused on enhancing image quality, speed, and the accuracy of text generation and inpainting.

Experts recognize Ideogram v2 for its unique strengths in text rendering and image editing. Its inpainting capabilities and ability to generate realistic text within images set it apart from other models.

The model’s capabilities are being explored for various applications, including graphic design, marketing materials, and content creation. Its ongoing development suggests further advancements in its text and image manipulation abilities.

4) Stable Diffusion XL

Stable Diffusion is a popular open-source text-to-image model. It uses latent diffusion techniques to generate high-quality images from text descriptions. The model is relatively lightweight, allowing it to run on consumer GPUs.

Stable Diffusion offers several versions. Version 1.5 is widely used and produces good results. The newer SDXL (Stable Diffusion XL) model offers improved image quality and detail.

One advantage of Stable Diffusion is its extensive ecosystem. Many developers have created custom models and tools based on it. This allows users to find specialized versions for specific image styles or subjects.

Stable Diffusion can be run locally on a user’s own computer. This provides more control and privacy compared to cloud-based services. However, it requires some technical knowledge to set up and use effectively.

The model excels at creating artistic and imaginative images. It can generate a wide range of styles, from photorealistic to abstract. Stable Diffusion is particularly good at landscapes, characters, and conceptual art.

Some users find Stable Diffusion results less consistent than other models. Image quality can vary depending on the prompts used. Getting the best results often requires experimentation and prompt engineering skills.

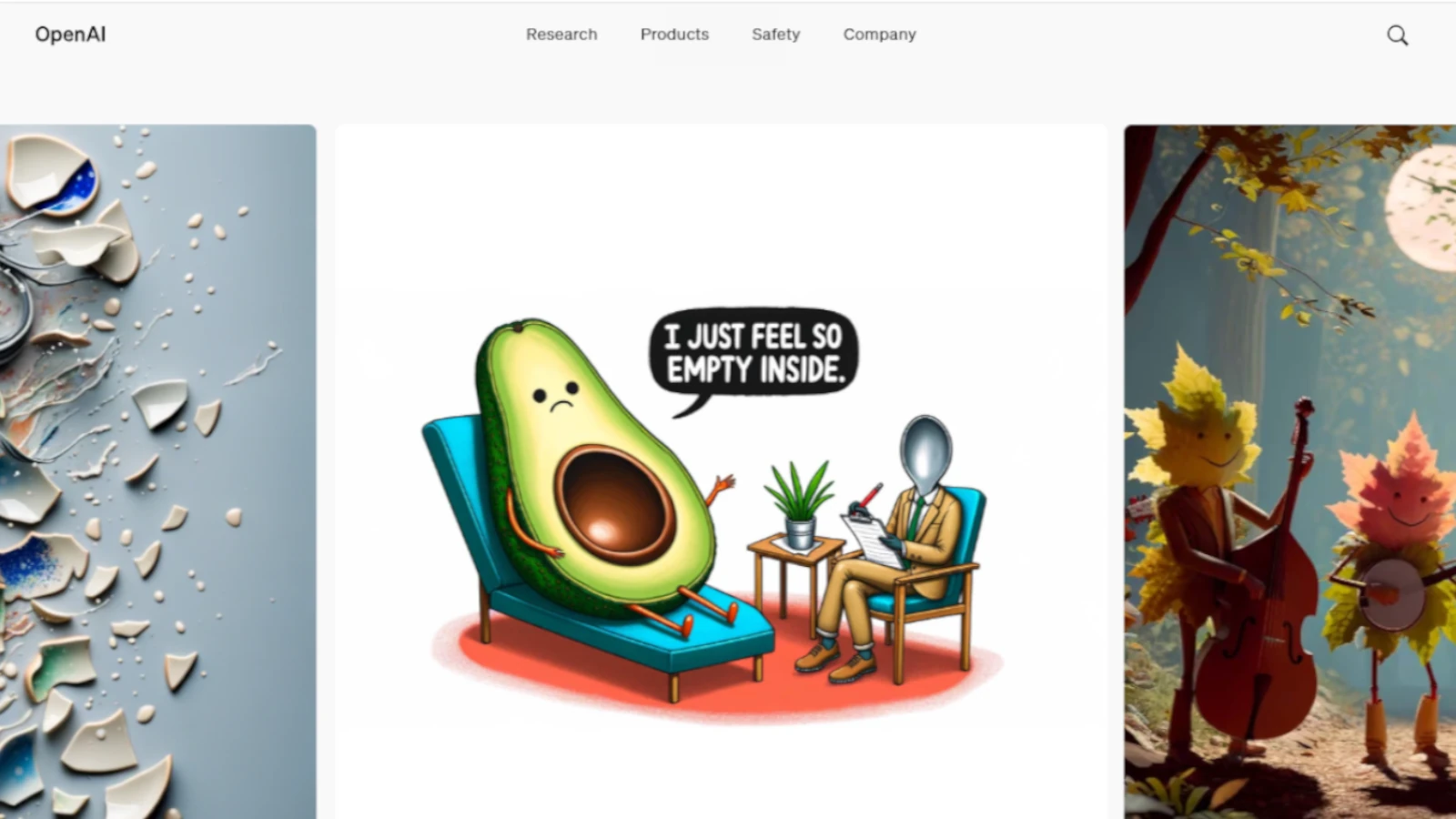

5) DALL-E 3

DALL-E 3 is OpenAI’s latest text-to-image model. It generates high-quality images from textual descriptions with improved accuracy.

The model excels at following prompts precisely. It captures more details than previous versions, creating images that closely match user inputs.

DALL-E 3 offers enhanced safety features. It declines requests to depict public figures by name and works to reduce bias in visual representation.

The system produces vivid, realistic images across various domains. Its versatility allows users to create diverse visual content from written ideas.

OpenAI released DALL-E 3 in October 2023. It builds on the successes of earlier iterations while pushing the boundaries of AI image generation.

Experts consider DALL-E 3 a leader in text-to-image technology. Its ability to understand nuanced prompts sets it apart from competitors.

The model’s capabilities continue to expand. Users are still discovering new applications and pushing the limits of what DALL-E 3 can create.

6) Midjourney

Midjourney stands out as a leading text-to-image model in the AI art generation space. It consistently produces high-quality, visually striking images across a wide range of styles and subjects.

The latest version, Midjourney v6.1, boasts an impressive Quality ELO score of 1098.2. This places it near the top of the leaderboard among text-to-image models.
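To give a feel for what an Elo-style score means, the sketch below converts a rating gap into an expected win rate in head-to-head comparisons. It assumes the leaderboard uses the conventional Elo formula with a base-10, 400-point scale, which the source does not confirm. The opponent rating of 1000 is a made-up value for illustration only.

```python
def elo_expected_score(rating_a: float, rating_b: float) -> float:
    """Expected share of pairwise wins for A against B under standard Elo."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


if __name__ == "__main__":
    # 1098.2 is the score quoted above; 1000.0 is a hypothetical opponent.
    win_rate = elo_expected_score(1098.2, 1000.0)
    print(f"Expected win rate: {win_rate:.2f}")
```

Under these assumptions, a model rated about 98 points above its opponent would be preferred in roughly 64% of comparisons. Two equally rated models would each be preferred half the time.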

Midjourney excels in creating detailed, aesthetically pleasing compositions. It demonstrates a strong understanding of artistic principles, including color theory, composition, and lighting.

Users praise Midjourney for its ability to interpret complex prompts accurately. The model can generate images that closely match the given text descriptions, often surpassing user expectations.

Midjourney’s strengths include realistic renderings, fantastical scenes, and abstract concepts. It handles various art styles with ease, from photorealistic portraits to surrealist landscapes.

The model receives regular updates, improving its capabilities and expanding its repertoire of styles and techniques. This commitment to continuous improvement keeps Midjourney at the forefront of AI image generation technology.

7) Adobe Firefly

Adobe Firefly is a powerful text-to-image AI model. It generates high-quality images from simple text prompts. The model comes in two versions: Firefly Image 2 and Firefly Image 3.

Users can access Firefly through Adobe’s creative apps. These include Photoshop, Lightroom, and Illustrator. A web app version is also available for broader accessibility.

Firefly offers free AI-generated images online. This feature makes it attractive for users on a budget. The model’s ability to create custom images sets it apart from competitors.

Organizations can train custom models using Firefly Image 3. This allows for tailored image generation. Users can share and download their creations easily.

Firefly integrates seamlessly with Adobe’s ecosystem. This integration enhances its appeal to existing Adobe customers. The model’s versatility makes it suitable for various creative projects.

8) Runway ML Gen-2

Runway ML Gen-2 is a text-to-video AI model that creates short video clips from text prompts. It can generate videos in various styles and genres based on user input. Gen-2 also supports image-to-video and video-to-video functionalities.

The model excels at producing high-quality, visually appealing videos. It can create diverse content ranging from realistic scenes to abstract animations. Gen-2’s ability to understand and interpret complex text prompts sets it apart from other video generation tools.

Users can fine-tune their results using advanced settings. These include adjusting prompt weight, style, and mood. The prompt weight feature allows users to control how closely the generated video adheres to the text input.

Gen-2 builds upon previous text-to-image models by predicting motion from starting images. This approach enables the creation of coherent video sequences. The technology shows promise for filmmakers, advertisers, and content creators seeking to quickly produce visual content.

While Gen-2 offers impressive capabilities, users should be aware of potential limitations. As with many AI models, results may vary depending on the complexity of the prompt and desired output.

9) Artbreeder

Artbreeder is a powerful text-to-image model that combines AI and user creativity. It allows users to generate unique images by blending existing ones or using text prompts.

The platform offers various tools for image creation. Its Composer feature mixes images and text to produce new visuals. The Collager tool lets users build images from shapes, text, and other images.

Artbreeder has gained significant popularity. It boasts 10 million users and has generated over 250 million images. This wide user base contributes to a diverse range of artistic styles and creations.

One of Artbreeder’s strengths is its collaborative nature. Users can work together on image projects, sharing ideas and building upon each other’s work. This fosters a community-driven approach to AI art creation.

The platform uses a genetic blending approach for image modification. Users can alter facial features, landscapes, and other elements to create custom images easily. This flexibility allows for a wide range of artistic expression.

Artbreeder offers both free and paid options. The free tier provides basic features, while paid plans starting at $19 per month unlock enhanced capabilities. This tiered approach makes AI art creation accessible to both casual users and serious artists.

10) DeepArt

DeepArt is a text-to-image AI model that transforms written descriptions into unique visual artwork. It uses advanced neural networks to interpret text prompts and generate corresponding images.

Users can input detailed descriptions or simple phrases to create custom digital art pieces. DeepArt excels at producing abstract and stylized images, often with a painterly quality.

The model offers a range of artistic styles, from impressionism to cubism. This versatility allows users to experiment with different visual aesthetics based on their text inputs.

DeepArt’s strength lies in its ability to capture mood and atmosphere. It interprets emotional cues from text and translates them into color palettes and compositions.

One limitation of DeepArt is its focus on artistic renderings rather than photorealistic images. It may not be the best choice for users seeking lifelike depictions.

The platform provides a user-friendly interface, making it accessible to both artists and non-artists. It serves as a creative tool for inspiration, concept art, and digital content creation.

11) NightCafe

NightCafe stands out as a versatile AI image generation platform. It offers users access to multiple text-to-image models, including DALL-E 3, Stable Diffusion XL, and Ideogram 2.0.

The platform allows creators to choose their preferred model for each project. Some models are free to use, while others require payment. This flexibility caters to various user needs and preferences.

NightCafe’s integration of Ideogram 2.0, which Ideogram released in August 2024, marked a significant update. This model, developed by former Google engineers, enhances the platform’s text-to-image capabilities.

Users can create a wide range of AI-generated images on NightCafe. The platform supports the creation of realistic, artistic, and imaginative visuals based on text descriptions.

NightCafe also features AI image challenges. These contests allow participants to win credits, adding an engaging element to the platform. The minimum daily prize is 5 credits.

For those interested in selling AI-generated images, NightCafe provides information on stock photo agencies that accept such content. This feature may appeal to users looking to monetize their creations.

12) DeepAI Text to Image

DeepAI offers a user-friendly text-to-image generation service. Users can create images by entering descriptive text prompts. The AI understands these prompts and produces corresponding visuals.

The tool generates high-resolution images suitable for various purposes. These include web content, print materials, and social media posts. No technical skills are required to use the service.

DeepAI’s image generator employs advanced artificial intelligence algorithms. These algorithms bridge the gap between language and visual representation. The system aims to create images with precision based on the input text.

Users can expect a straightforward process when using DeepAI. They simply type their desired image description and select preferences. The AI then processes this information to create the image.

DeepAI’s service competes with other text-to-image tools in the market. It focuses on simplicity and elegance in its image creation process. The tool strives to capture the essence of users’ prompts in its visual outputs.

13) Craiyon

Craiyon, formerly known as DALL-E mini, is a free AI image generator. It creates images based on text prompts entered by users.

Craiyon uses its own AI model to generate images. The tool is accessible through a web interface, making it easy for users to start creating AI art quickly.

One advantage of Craiyon is its simplicity. Users can generate images without needing advanced technical knowledge. The tool produces multiple image options for each prompt, giving users variety.

Craiyon’s image quality is generally lower than some paid alternatives. The generated images often have a distinct, somewhat abstract style. This can be a creative feature for some projects, but may not suit all needs.

The tool is popular for casual use and experimentation with AI art. Its free access makes it a good starting point for those new to text-to-image generation.

Craiyon does not offer advanced editing features. Users looking for more control over their generated images may prefer other options. Despite this limitation, Craiyon remains a useful tool for quick, fun image creation.

Understanding Text-to-Image Models

Text-to-image models use artificial intelligence to create visual content from written descriptions. These advanced systems blend natural language processing with computer vision to generate images that match text prompts.

How Text-to-Image Models Work

Text-to-image models use deep learning algorithms to analyze text inputs and generate corresponding images. The process begins with the model encoding the text prompt into a numerical representation. This encoded information then guides the image generation process.

The models typically use large datasets of image-text pairs for training. They learn to associate textual descriptions with visual features. Advanced architectures like GANs (Generative Adversarial Networks) or diffusion models are often employed.

During image creation, a diffusion model progressively refines a random noise input. It adjusts the image values (raw pixels, or a compressed latent representation in latent diffusion models) based on the encoded text prompt. This iterative process continues until a coherent image emerges that matches the description.
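The refinement loop described above can be sketched in a few lines. This is a toy under loud assumptions: `encode_prompt` is a hypothetical stand-in that hashes the prompt into numbers (a real model uses a trained text encoder), and the "noise prediction" is simply the gap to that target rather than the output of a neural network. It only shows the shape of the process: encode the text, start from noise, refine step by step.

```python
import hashlib
import random


def encode_prompt(prompt: str, size: int = 8) -> list[float]:
    """Toy text encoder: map a prompt to `size` values in [0, 1] (size <= 32)."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return [digest[i] / 255.0 for i in range(size)]


def generate(prompt: str, steps: int = 50, seed: int = 0) -> list[float]:
    """Start from random noise and refine it step by step toward the prompt."""
    rng = random.Random(seed)
    target = encode_prompt(prompt)  # stands in for the text conditioning
    image = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise "pixels"
    for step in range(steps):
        # A real model predicts the noise with a trained network;
        # this toy uses the gap to the target as its "prediction".
        predicted_noise = [x - t for x, t in zip(image, target)]
        # Remove a growing fraction of the remaining noise (all of it at the end).
        rate = 1.0 / (steps - step)
        image = [x - rate * n for x, n in zip(image, predicted_noise)]
    return image


if __name__ == "__main__":
    result = generate("a red fox in the snow")
    print([round(value, 3) for value in result])
```

Because the toy "denoiser" already knows the target, it always converges. Real models have to learn that mapping from image-text pairs, which is where most of the difficulty lies.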

Applications of Text-to-Image Models

Text-to-image models have diverse applications across industries:

- Creative Fields: Artists and designers use these tools for concept art, storyboarding, and brainstorming visual ideas.

- Marketing: Advertisers generate custom visuals for campaigns quickly and cost-effectively.

- E-commerce: Online retailers create product images from descriptions, enhancing catalog variety.

- Education: Teachers illustrate complex concepts with tailored visuals.

- Entertainment: Game developers and filmmakers prototype scenes and characters.

These models also aid in accessibility, helping visually impaired individuals better understand textual content through generated images. As the technology improves, its potential uses continue to expand across various domains.

Factors Influencing Model Performance

Text-to-image models rely on complex interactions between data and algorithms. These elements shape the quality and capabilities of generated images.

Data Quality

High-quality datasets are crucial for text-to-image models. Diverse and accurately labeled images help models learn varied visual concepts. Large datasets expose models to more examples, improving their ability to generate complex scenes.

Clean data is essential. Mislabeled or low-quality images can lead to errors in generated content. Models trained on biased datasets may produce skewed results. Ethical considerations in data collection are important to avoid reinforcing stereotypes.
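A very rough idea of what basic dataset cleaning can look like is sketched below. Everything here is hypothetical: the `Pair` record, the `clean_pairs` function, and the thresholds are illustrative choices, not the pipeline of any model discussed in this article. Real pipelines typically add further checks that this sketch leaves out.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pair:
    """One image-text training example (hypothetical record layout)."""
    image_path: str
    caption: str
    width: int
    height: int


def clean_pairs(pairs, min_side=256, min_caption_words=3):
    """Drop pairs with tiny images, too-short captions, or duplicate entries."""
    seen = set()
    kept = []
    for pair in pairs:
        caption = " ".join(pair.caption.split())  # normalise whitespace
        if min(pair.width, pair.height) < min_side:
            continue  # image too small to be useful
        if len(caption.split()) < min_caption_words:
            continue  # caption too short to describe the image
        key = (pair.image_path, caption.lower())
        if key in seen:
            continue  # same image and caption already kept
        seen.add(key)
        kept.append(Pair(pair.image_path, caption, pair.width, pair.height))
    return kept
```

Filters like these catch only obvious problems. Mislabeled captions and biased coverage need separate review, since a pair can pass every check above and still teach the model the wrong thing.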

Recent models use web-scale datasets with billions of image-text pairs. This vast amount of data allows for better understanding of language and visual concepts.

Algorithmic Advances

Innovations in model architecture drive performance improvements. Transformer-based models like DALL-E 3 and Imagen 2 show enhanced text understanding and image generation.

Diffusion models, used in Stable Diffusion, offer high-quality image synthesis. These models gradually refine noise into coherent images, capturing fine details.

Attention mechanisms help models focus on relevant parts of text prompts. This improves the alignment between generated images and user instructions.
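One common form of this idea is scaled dot-product attention, sketched below for a single query. The article does not say which attention variant any particular model uses, so treat this as a generic illustration. The two-dimensional "embeddings" are made-up toy values, not outputs of a real encoder.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for one query vector."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    # Output is the weighted average of the value vectors.
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output


if __name__ == "__main__":
    tokens = ["red", "fox", "snow"]
    # Toy token embeddings, used as both keys and values.
    keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    query = [0.0, 4.0]  # an image region that "looks like" the fox token
    weights, _ = attend(query, keys, keys)
    for token, weight in zip(tokens, weights):
        print(f"{token}: {weight:.2f}")
```

In this example the "fox" token receives the largest weight, which is the sense in which attention lets a part of the image focus on the relevant part of the prompt.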

Multi-modal learning techniques allow models to better connect text and visual information. This leads to more accurate interpretations of complex prompts.

Optimization methods like efficient training and inference techniques enable faster image generation. This improves user experience and allows for real-time applications.

Challenges in Developing Text-to-Image Models

Text-to-image models face significant hurdles in their development and implementation. These challenges span ethical considerations and technical limitations, impacting their effectiveness and responsible use.

Ethical Considerations

Text-to-image models raise important ethical questions. Copyright infringement is a major concern, as these models may generate images that resemble copyrighted works. This creates legal risks for developers and users alike.

Bias and fairness issues also plague these models. They often reflect societal biases present in their training data, potentially producing discriminatory or stereotypical images. This can reinforce harmful prejudices and exclude underrepresented groups.

Misuse for creating deepfakes or misleading content is another ethical challenge. Bad actors could exploit these models to spread misinformation or manipulate public opinion through fake images.

Transparency and accountability are crucial. Users should know when an image is AI-generated, but many current models lack built-in disclosure mechanisms.

Technical Limitations

Text-to-image models face several technical constraints. Generating high-quality, photorealistic images remains difficult, especially for complex scenes or specific details requested in prompts.

Consistency across multiple generated images is challenging. Models struggle to maintain coherent styles, characters, or settings when creating series of related images.

Text comprehension is another hurdle. Models sometimes misinterpret or ignore parts of prompts, leading to inaccurate or incomplete visual representations.

Computational requirements pose practical limitations. Larger text-to-image models need significant processing power and memory, which limits their use on consumer devices, although lighter models such as Stable Diffusion can run on consumer GPUs.

Control over specific image elements is limited. Users often can’t fine-tune individual aspects of generated images, such as object placement or lighting conditions.

Frequently Asked Questions

Text-to-image AI models have rapidly advanced, offering various options for creators and businesses. Users often seek information on top-rated models, quality comparisons, and accessibility across different platforms.

What are the top-rated text-to-image models currently available?

DALL-E 3, Midjourney, and Stable Diffusion rank among the highest-rated text-to-image models in 2024. DALL-E 3 excels in photorealism and consistency. Midjourney offers exceptional detail across artistic styles. Stable Diffusion provides a balance of quality and customization options.

Which text-to-image AI generators offer the highest quality output?

DALL-E 3 and Midjourney consistently produce high-quality images. Adobe Firefly specializes in commercial-ready visuals. Runway ML Gen-2 stands out for its video generation capabilities alongside still images.

How do the capabilities of free text-to-image models compare to paid versions?

Free models often have limitations in image resolution, generation speed, and advanced features. Paid versions typically offer higher-quality outputs, faster processing, and more customization options. Some models, like Stable Diffusion, are free to run yourself and are also offered through paid hosted services with varying capabilities.

Are there professional-grade open source text-to-image models accessible to the public?

Stable Diffusion stands out as a professional-grade open source model. It allows users to run the model locally or access it through various platforms. This accessibility enables developers and researchers to build upon and customize the technology.

What platforms support the most advanced text-to-image model applications for iOS?

Several platforms offer iOS applications for text-to-image generation. DALL-E 3 is accessible through the ChatGPT app. Midjourney provides web-based access optimized for mobile use. Adobe Firefly integrates with various Adobe mobile apps for on-the-go creation.

Can you recommend text-to-image AI generators that are well regarded by the online community?

Midjourney has gained popularity for its artistic outputs and active user community. Stable Diffusion is praised for its open-source nature and customization potential. DALL-E 3 receives positive feedback for its ability to understand and execute complex prompts accurately.