Google has officially launched Gemini 2.0, the next generation of its AI models. This version adds agentic capabilities, allowing the AI to carry out complex tasks on behalf of users. It also brings significant improvements in performance, image generation, and audio processing. Gemini 2.0 offers stronger reasoning and coding abilities, along with multimodal understanding that lets it process several types of data, including text, images, and code.

The goal of Gemini 2.0 is to enhance Google products such as Search and the Gemini app (formerly Bard), giving users more accurate results and more helpful responses. This article explores the key features of Gemini 2.0, compares it to other large language models (LLMs), and discusses its potential impact on various applications.

Exploring the Power of Gemini 2.0

What is Gemini 2.0?

Google’s Gemini 2.0 is a major update to its large language model (LLM). It’s designed to be more powerful and capable than its predecessor. Gemini 2.0 aims to improve in several key areas, including reasoning, coding, and multimodal understanding (handling different types of information like text, images, and code). This new model is expected to power many Google products and services, from Search to the Gemini app.

Features and Improvements

Gemini 2.0 brings several important enhancements:

- Advanced Reasoning: Gemini 2.0 is designed to handle complex problems and logic puzzles more effectively. This means better performance in tasks that require critical thinking.

- Improved Coding Skills: The model can write, debug, and explain code in many programming languages with greater accuracy. This is helpful for developers and anyone working with code.

- Multimodal Capabilities: Gemini 2.0 can understand and connect different types of data, like text and images. This opens up new possibilities for how we interact with information.

How Does Gemini 2.0 Compare to Other LLMs?

The LLM field is competitive, with many companies developing advanced models. Gemini 2.0 aims to stand out through its focus on multimodal understanding and advanced reasoning. It’s designed as a general-purpose model, meaning it can handle a wide range of tasks. Here’s a quick comparison:

| Feature | Gemini 2.0 | Other LLMs |
|---|---|---|
| Multimodal Understanding | Strong | Varies |
| Reasoning Ability | Advanced | Varies |
| Coding Skills | Improved | Varies |
| General Purpose | Yes | Varies |

What Can You Do With Gemini 2.0?

Gemini 2.0 has many potential uses:

- Enhanced Search: Better understanding of user queries for more accurate results.

- Improved Gemini App: More helpful and informative responses in Google’s AI chatbot, formerly known as Bard.

- New AI Tools: Development of new applications that can understand and process different types of information.

Questions People Ask About Gemini 2.0

Is Gemini 2.0 available to everyone?

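Gemini 2.0 is being rolled out gradually across Google products and services. At launch, Gemini 2.0 Flash was offered as an experimental model to developers through the Gemini API in Google AI Studio and Vertex AI. Check for updates to your favorite Google tools.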

How is Gemini 2.0 different from previous models?

Gemini 2.0 is designed with enhanced reasoning, improved coding skills, and stronger multimodal capabilities.

What are the benefits of multimodal AI?

Multimodal AI can connect different types of data, leading to a more complete understanding of information.

The Future of Large Language Models

LLMs like Gemini 2.0 are rapidly changing how we interact with technology. They can help us find information, generate creative content, and automate tasks. As these models continue to improve, we can expect even more powerful and useful applications in the future.

Understanding the Transformer Architecture

Large language models like Gemini 2.0 are built using a technology called the “transformer” architecture. This architecture is very effective at processing sequential data, like text. Transformers use a mechanism called “attention,” which allows the model to focus on the most relevant parts of the input when making predictions. This is what allows LLMs to understand context and relationships between words in a sentence. The transformer architecture has been a key factor in the recent advancements in natural language processing, and it continues to be a focus of research and development in the field.
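The attention mechanism described above is usually written as scaled dot-product attention, softmax(QK^T / sqrt(d_k))V. Each query vector is compared with every key vector, and the resulting weights are used to mix the value vectors. The sketch below is a minimal, single-head illustration in plain Python, written for this article. Google has not published Gemini 2.0's internal architecture, so it shows the general transformer technique rather than Gemini's actual implementation. The function names are our own.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    """Turn raw scores into weights that are positive and sum to 1."""
    # Subtract the largest score first so math.exp cannot overflow.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(q: Matrix, k: Matrix, v: Matrix) -> Matrix:
    """Compute softmax(Q K^T / sqrt(d_k)) V for small list-of-lists matrices.

    q: one row per query token; k and v: one row per key/value token.
    """
    d_k = len(k[0])
    output: Matrix = []
    for query in q:
        # Score this query against every key, scaled by sqrt(d_k).
        scores = [
            sum(qi * ki for qi, ki in zip(query, key)) / math.sqrt(d_k)
            for key in k
        ]
        # Attention weights: how much this token "focuses" on each other token.
        weights = softmax(scores)
        # The output row is the attention-weighted sum of the value vectors.
        output.append(
            [sum(w * val[j] for w, val in zip(weights, v)) for j in range(len(v[0]))]
        )
    return output
```

For example, when both keys are identical, each value receives a weight of 0.5. In that case `scaled_dot_product_attention([[1, 0]], [[1, 0], [1, 0]], [[0, 2], [4, 6]])` returns `[[2.0, 4.0]]`, the plain average of the two value vectors. Production models run many such heads in parallel on learned projections of the input, which this sketch leaves out.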

Short Summary:

- Google’s Gemini 2.0 introduces agentic AI systems capable of understanding context and planning ahead.

- The new model includes features like real-time image generation and multilingual audio processing, aiming to enhance user interactions.

- Projects like Astra and Mariner leverage Gemini 2.0’s capabilities, expanding AI use in everyday tasks and development workflows.

On Wednesday, Google took a considerable step forward in artificial intelligence with the launch of its Gemini 2.0 family of models, starting with the release of Gemini 2.0 Flash. This experimental release is central to Google’s strategy for staying competitive with industry heavyweights like OpenAI and Anthropic in a fast-moving AI market. Google’s Senior Vice President Tulsee Doshi stated,

“Gemini 2.0 ushers in a new era of agentic AI, blending advanced capabilities with practical applications.”

This upgraded model arrives roughly a year after the initial launch of Gemini. In that time, AI technology has advanced rapidly from simple query responses to systems capable of more nuanced conversations, deeper contextual understanding, and supervised actions on behalf of users. The shift marks significant progress toward AI that can not only assist but also act independently within set parameters.

Technical Innovations and Capabilities

Gemini 2.0 Flash reportedly runs at twice the speed of its predecessor, which Google presents as an efficiency advantage over competing models. In a demonstration, Doshi emphasized,

“The enhancements found in Gemini 2.0 are a direct result of our commitment to improving performance without compromising functionality.”

The infrastructure behind these developments includes Trillium, Google’s sixth-generation Tensor Processing Unit (TPU), which underpins the model’s performance.

With native image generation and multilingual audio output, Gemini 2.0 targets users who want more versatile and responsive AI applications. These features let users carry out tasks such as generating images on demand or holding seamless conversations across languages.

Project Astra: A Universal Assistant

Some of the most exciting developments built on Gemini 2.0 come from Project Astra, Google’s upgraded prototype of a universal AI assistant. Bibo Xu, Group Product Manager at Google DeepMind, said during the launch,

“Project Astra now features a state-of-the-art memory system that allows for contextual retention of conversations over multiple sessions.”

This upgraded memory allows the assistant to recall information and adapt to user needs more effectively, facilitating a more personalized experience.

The project, which is still in testing, demonstrated that it can sustain extended dialogues and switch between languages on the fly, showing how AI might bridge communication gaps in an increasingly global market. Project Astra is also set to integrate with major Google tools, such as Search and Maps, making it more useful and responsive.

Project Mariner: Tailored for Developers

For developers, Google introduced Project Mariner, designed to streamline web-related tasks. Jaclyn Konzelmann, Director of Product Management at Google Labs, showcased its potential: Mariner achieved an 83.5% success rate on the WebVoyager benchmark, which tests agents on real-world web navigation tasks.

“Mariner represents the future of human-agent interaction and optimizes web browsing through intelligent automation,” said Konzelmann.

The project has been trialed as a Chrome extension that navigates the web autonomously. The team acknowledges the risks and has made safety and user oversight central to the design. Google presents this approach as part of its commitment to responsible AI development, keeping users in control and protecting them from online threats.

Ethical Considerations and Responsible Development

Amid the excitement surrounding Gemini 2.0’s new capabilities, Google also acknowledges the ethical considerations woven into its deployment. Shrestha Basu Mallick, Group Product Manager for the Gemini API, highlighted the company’s dedication to responsible AI innovation, stating,

“Safety and ethical guidelines are at the forefront of our development strategy as we usher in these new technologies.”

Google intends to implement rigorous testing protocols along with user education to mitigate risks associated with autonomous AI systems.

As companies and consumers work out how to integrate AI into daily life, questions about academic integrity, dependence on technology, and the effects on critical thinking should take priority. Educational institutions may need to rethink their curricula so that AI tools support rather than hinder intellectual growth. Concerns about academic dishonesty also raise the question of how institutions should regulate AI use to encourage ethical practices.

Broader Implications for the Industry

The ramifications of Gemini 2.0 extend well beyond Google itself as the competitive landscape continues to shift. Microsoft, OpenAI, and a wave of new startups are all pushing AI capabilities forward, intensifying the race for market leadership. Google is betting that its integrated approach, which combines model performance with user-centric products, will drive sustained growth and adoption.

With Gemini 2.0, Google is not only enhancing its existing portfolio of products but also paving the way for innovations that could disrupt sectors ranging from education to e-commerce. As more users become familiar with AI-driven assistance, expectations for seamless, effective interactions will rise, pushing the boundaries of what AI technologies can achieve.

Public Reception and Future Prospects

Public reception of Gemini 2.0 is a mixture of optimism and trepidation. Many users are excited about the enhanced capabilities, especially those that can automate mundane tasks, allowing for a greater focus on creative and strategic endeavors. However, alongside the enthusiasm, a sense of caution lingers regarding potential overdependence on these systems.

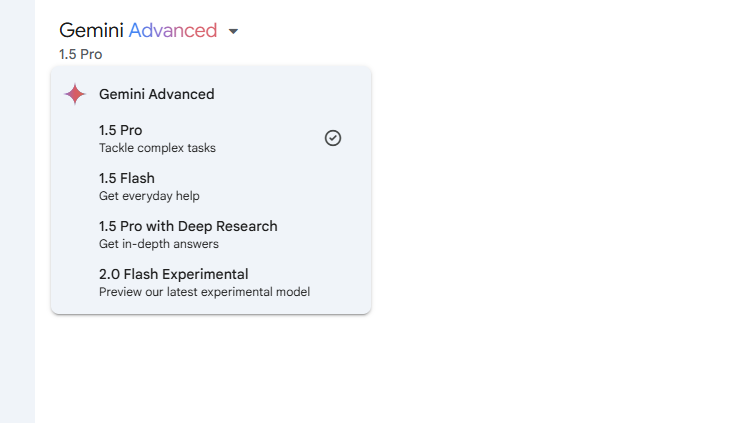

As Google continues to roll out the Gemini family of models and refine their features, the company recognizes the need for ongoing dialogue with users and stakeholders to address concerns and fine-tune its offerings. With the full release of Gemini 2.0 Flash expected in January 2025, users and developers alike are keen to explore what these models will make possible.