When DeepSeek, a rising Chinese AI startup, said the model behind its R1 release cost just $6 million to train, the industry took notice. If that figure had captured the full cost, it would have been a groundbreaking moment: proof that cutting-edge AI models could be trained at a fraction of the cost shouldered by industry giants like OpenAI and Google DeepMind.

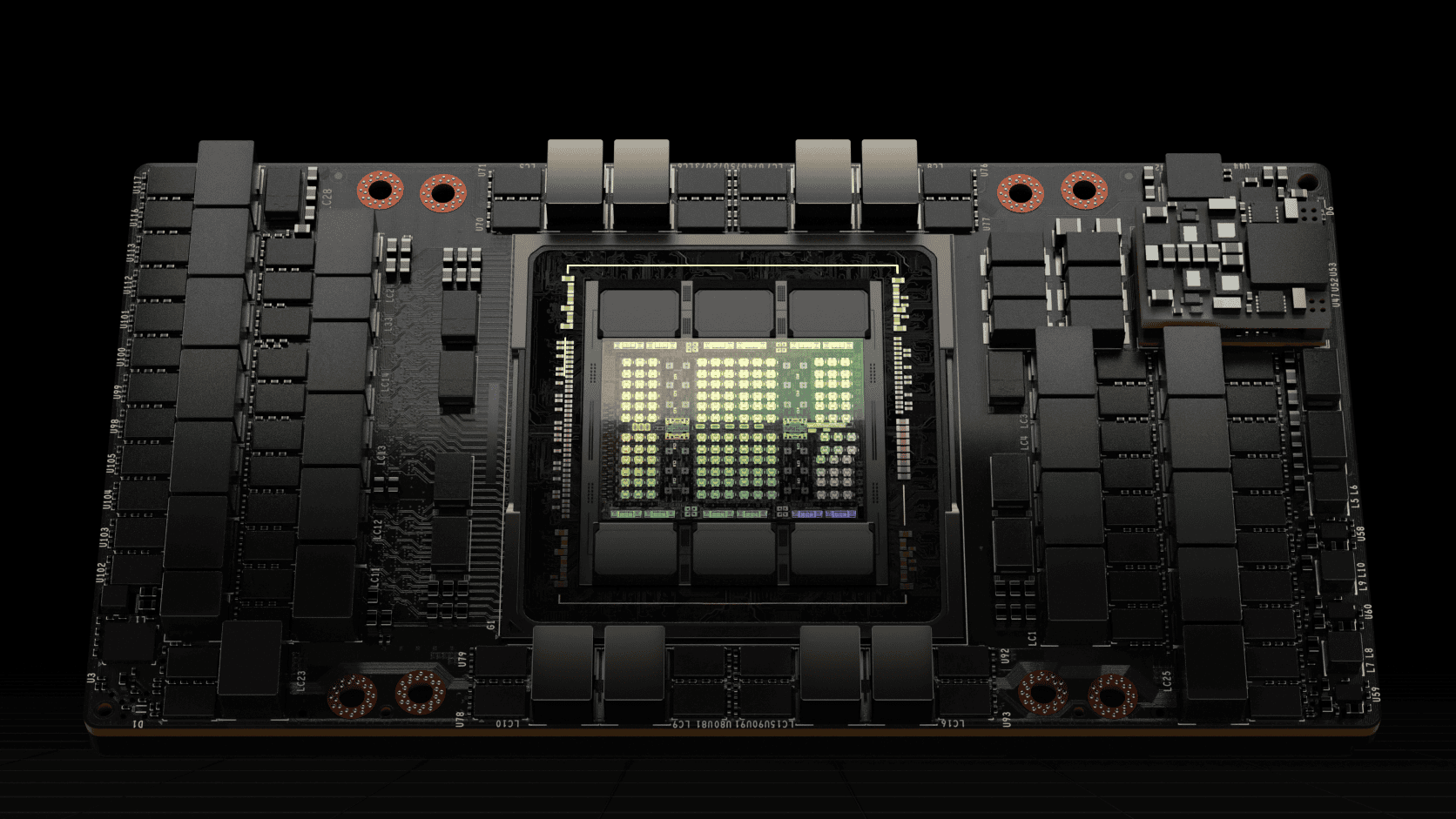

However, a deeper investigation by SemiAnalysis has now exposed a far different reality. The true cost of DeepSeek’s AI ambitions? Over $1.6 billion in capital expenditure, with an estimated 50,000 Nvidia Hopper GPUs powering its operations. This revelation not only shatters the illusion of “cheap” AI but also reshapes our understanding of DeepSeek’s competitive position in the global AI arms race.

The Real Cost of AI: More Than Just Training

The discrepancy between DeepSeek’s reported $6 million figure and the estimated $1.6 billion investment stems from a fundamental misunderstanding, or perhaps a strategic obfuscation, of what AI training costs truly entail. The $6 million figure accounts only for the GPU compute time of the final training run of DeepSeek-V3, the base model on which R1 was built. DeepSeek’s own technical report notes that it excludes the costs of prior research and ablation experiments.
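To see how narrow the $6 million figure is, here is a minimal sketch of the arithmetic behind it. The inputs are the numbers we understand the DeepSeek-V3 technical report to give: about 2.788 million H800 GPU-hours, priced at an assumed rental rate of $2 per GPU-hour. Treat them as assumptions to check against the report. Nothing else (hardware purchases, research runs, salaries) enters the calculation.

```python
# Sketch of a "final training run" cost estimate: GPU-hours x rental price.
# Inputs are assumed from the DeepSeek-V3 technical report; verify before reuse.
PRETRAIN_GPU_HOURS = 2_664_000     # pre-training
CONTEXT_EXT_GPU_HOURS = 119_000    # context-length extension
POST_TRAIN_GPU_HOURS = 5_000       # post-training
RENTAL_USD_PER_GPU_HOUR = 2.0      # assumed H800 rental price


def training_run_cost(gpu_hours: float, usd_per_gpu_hour: float) -> float:
    """Rental cost of GPU time for one run; ignores capex, staff and R&D."""
    return gpu_hours * usd_per_gpu_hour


total_hours = PRETRAIN_GPU_HOURS + CONTEXT_EXT_GPU_HOURS + POST_TRAIN_GPU_HOURS
cost = training_run_cost(total_hours, RENTAL_USD_PER_GPU_HOUR)
print(f"{total_hours:,} GPU-hours -> ${cost / 1e6:.3f} million")
```

The result, roughly $5.6 million, rounds to the $6 million headline. The sketch makes plain that it is a rental-price estimate for a single run, not a total budget.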

However, the overall cost of building and maintaining a cutting-edge AI model extends far beyond training runs. The real financial burden includes:

- Massive hardware infrastructure – With an estimated 50,000 Nvidia Hopper GPUs, DeepSeek’s investment in computational power rivals some of the biggest AI firms globally. At $25,000 to $40,000 per H100, a fleet of that size would cost $1.25 billion to $2 billion if every unit were an H100. The real figure is probably lower, because the fleet reportedly also includes H20s, a lower-spec variant built for the Chinese market.

- Energy costs – Operating such a large fleet of GPUs requires enormous amounts of electricity. Data centers hosting AI clusters need specialized cooling systems, further driving up operational costs.

- Data acquisition and processing – Training an AI model at scale requires access to high-quality, diverse datasets. Data collection, cleaning, and preparation represent a significant financial and logistical hurdle.

- Engineering talent and research – Hiring top-tier AI researchers, engineers, and infrastructure specialists is another costly endeavor. DeepSeek has been actively recruiting from leading AI firms and universities, adding to its expenditure.
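The hardware estimate in the first bullet is simple multiplication. The sketch below reproduces it using the article’s $25,000 to $40,000 H100 price range. Pricing every unit as an H100 is a simplifying assumption, since the fleet is reported to mix several Hopper variants.

```python
# Bounds on GPU spend: fleet size x per-unit price range.
# Assumption: every GPU priced like an H100 (the real fleet mixes Hopper variants).
FLEET_SIZE = 50_000
PRICE_LOW_USD = 25_000
PRICE_HIGH_USD = 40_000


def gpu_spend_range(units: int, low: int, high: int) -> tuple[int, int]:
    """Return (lower, upper) bounds on total GPU purchase cost in USD."""
    return units * low, units * high


low, high = gpu_spend_range(FLEET_SIZE, PRICE_LOW_USD, PRICE_HIGH_USD)
print(f"${low / 1e9:.2f}B to ${high / 1e9:.2f}B")  # $1.25B to $2.00B
```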

Why This Matters: The New Reality of AI Economics

The exposure of DeepSeek’s true spending underscores a critical reality—advanced AI development is not getting cheaper. While the efficiency of AI models is improving, training state-of-the-art large language models still requires staggering financial and infrastructural commitments.

DeepSeek’s vast compute power signals that it is no mere startup—it’s a serious contender in the AI race, potentially rivaling OpenAI, Google, and Anthropic. The scale of its infrastructure contradicts earlier assumptions that Chinese AI firms were trailing behind their Western counterparts. With such massive backing, DeepSeek could emerge as a dominant force, particularly in China’s growing AI ecosystem, where U.S. tech sanctions have made homegrown solutions even more critical.

The Bigger Picture: What’s Next for DeepSeek?

With its vast GPU resources, DeepSeek is poised to develop increasingly powerful AI models. The question now is: Can it leverage this infrastructure effectively?

- Competing with OpenAI and Google – Access to compute power is crucial, but raw hardware alone does not guarantee success. The effectiveness of DeepSeek’s models will depend on their architecture, training methodologies, and ability to innovate in AI applications.

- Navigating U.S.-China AI tensions – As China ramps up its AI ambitions, U.S. restrictions on advanced semiconductor exports could impact DeepSeek’s long-term strategy. If Nvidia’s supply chain becomes constrained, Chinese firms may need to pivot to domestic alternatives.

- Commercializing AI at scale – DeepSeek must prove that its investment translates into profitable AI applications, whether in enterprise services, cloud AI tools, or consumer-facing products.

Conclusion: No Such Thing as Cheap AI

The DeepSeek saga serves as a wake-up call for those who believed that AI breakthroughs could come at a fraction of the cost. The AI revolution is expensive, and only those with the deepest pockets can afford to play at the highest level.

While DeepSeek’s claims of a $6 million AI model may have initially raised eyebrows, the real story is far more compelling: China is investing heavily in AI, and DeepSeek is positioning itself as a major force in the global AI race.

If DeepSeek can effectively utilize its enormous GPU power, it may soon find itself standing shoulder to shoulder with the world’s biggest AI players. But in the end, success in AI is not just about how much you spend—it’s about what you build.

Unmasking DeepSeek’s AI Investment

The $6 Million Mirage

DeepSeek made waves with its claim of building a powerful AI model for a mere $6 million. This figure seemed impossibly low compared to the billions spent by industry giants. Experts quickly expressed skepticism. The initial number likely only covered a fraction of the actual expenses, perhaps the direct costs of the final training run. It overlooked the massive investment required for research, infrastructure, and personnel.

SemiAnalysis Uncovers the Truth

A report by SemiAnalysis paints a very different picture. Their investigation suggests DeepSeek’s actual spending is closer to $1.6 billion. This colossal sum aligns more with the scale of their AI ambitions and the hardware they’ve acquired.

Hardware Breakdown

SemiAnalysis estimates DeepSeek has amassed a staggering 50,000 Nvidia Hopper GPUs. This includes a mix of H800s, H100s, and H20s. Such a vast collection of high-performance processors doesn’t come cheap. The hardware alone likely cost DeepSeek over a billion dollars.

Operating Expenses Add Up

Beyond the initial hardware outlay, DeepSeek faces ongoing operating costs. SemiAnalysis pegs these at around $944 million, separate from the roughly $1.6 billion in server capital expenditure. This covers expenses like energy consumption, maintenance, and the salaries of their AI specialists.
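If, as we read the SemiAnalysis estimate, the $944 million in operating costs comes on top of the roughly $1.6 billion in server capital expenditure rather than being part of it, the combined outlay is easy to tally. Treat that additivity as an assumption.

```python
# Combine capital and operating cost estimates (USD).
# Assumption: the two SemiAnalysis figures are separate, additive buckets.
SERVER_CAPEX_USD = 1.6e9
OPERATING_COST_USD = 944e6

total = SERVER_CAPEX_USD + OPERATING_COST_USD
opex_share = OPERATING_COST_USD / total
print(f"total about ${total / 1e9:.2f}B, operating share about {opex_share:.0%}")
```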

Why the Discrepancy Matters

The gap between the reported $6 million and the estimated $1.6 billion is significant. It highlights the complexities of AI development costs. A seemingly small investment can balloon when you factor in all the necessary components. Transparency about these costs is crucial for investors and the public.

DeepSeek’s Strategy

The $1.6 billion figure makes DeepSeek’s achievement less startling, but it doesn’t diminish the company’s efforts. The company is clearly making a major push in the AI race. They are investing heavily to compete with established players. Their strategy may involve a combination of technical innovation and aggressive hardware acquisition. Whether this bet pays off remains to be seen.

Comparing AI Investments

| Company | Estimated Investment | Key Hardware |
|---|---|---|
| DeepSeek | $1.6 billion | ~50,000 Nvidia Hopper GPUs |
| Other major players | Billions of dollars | Tens of thousands of high-performance GPUs or more |

Key Takeaways

- DeepSeek’s estimated AI development costs are roughly 267 times the $6 million figure it initially cited

- The company operates one of the largest GPU fleets in the AI industry, an estimated 50,000 units

- Hardware and infrastructure costs dominate AI model development expenses

Analysis of Reported Costs and Investments

Initial claims of a $6 million AI model training cost have sparked significant industry debate and scrutiny, leading to revelations that actual expenses likely exceed a billion dollars.

Discrepancy in DeepSeek’s Stated and Actual Expenditures

DeepSeek’s public statement of $6 million in training costs significantly underestimated the true financial scope of their AI development. SemiAnalysis estimates actual costs at approximately $1.6 billion, roughly 267 times the original claim.
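The multiple quoted here is simply the ratio of the two headline numbers, which is quick to check:

```python
# Ratio of the estimated total spend to the headline training-run figure.
CLAIMED_USD = 6e6        # DeepSeek's headline training cost
ESTIMATED_USD = 1.6e9    # SemiAnalysis capital-expenditure estimate

multiple = ESTIMATED_USD / CLAIMED_USD
print(f"about {multiple:.0f}x")  # about 267x
```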

The disparity stems from multiple uncounted expenses. These include research and development spanning several years, synthetic data generation, and extensive infrastructure costs.

OpenAI’s GPT-4, whose training reportedly cost more than $100 million, provides a useful industry benchmark. Against it, $6 million is implausible as the total cost of building a frontier model, though not as the cost of a single final training run.

Insights into Technology and Infrastructure Investments

DeepSeek’s AI infrastructure relies heavily on advanced GPU clusters. The company has deployed an estimated 50,000 GPUs, primarily consisting of Nvidia Hopper series processors.

Data center investments form a substantial portion of the total expenditure. These facilities require specialized cooling systems, power management, and redundancy measures.

The hardware configuration includes:

- H100 and H800 GPU arrays

- High-performance networking equipment

- Enterprise-grade storage systems

- Advanced cooling infrastructure

Role of Strategic Partnerships and Industry Support

Suppliers play a crucial role in DeepSeek’s infrastructure development: Nvidia provides the GPU hardware at the core of its clusters.

Strategic partnerships help distribute the capital burden. These collaborations include shared research initiatives and technology exchange programs.

Industry analysts point to similar arrangements at competing firms. Microsoft’s partnership with OpenAI demonstrates the value of strategic alliances in managing AI development costs.

Implications and Future Outlook

The revealed costs of AI development signal major shifts in industry dynamics, technological advancement, and financial planning for AI companies and investors.

Enhancing AI Capabilities and Efficiency

AI training costs require significant optimization to make large-scale model development sustainable. DeepSeek’s infrastructure of 50,000 GPUs demonstrates the massive computing power needed for advanced AI models.

GPU utilization rates and training efficiency must improve to reduce costs. Companies are also adopting new architectures such as Multi-Head Latent Attention (MLA), which DeepSeek uses to shrink the memory needed for the attention key-value cache, so the same hardware can handle more work.
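Multi-Head Latent Attention saves resources mainly by caching one small compressed latent vector per token instead of full per-head keys and values. The sketch below compares per-token, per-layer KV-cache sizes. The dimensions (128 heads of size 128, a 512-dimensional latent, a 64-dimensional decoupled positional key) are the values we understand the DeepSeek-V2 paper to report and should be checked against it.

```python
# Per-token, per-layer KV-cache size (stored elements) for standard
# multi-head attention (MHA) versus Multi-Head Latent Attention (MLA).
# Dimensions are assumed from the DeepSeek-V2 paper.
N_HEADS = 128      # n_h: attention heads
HEAD_DIM = 128     # d_h: per-head dimension
LATENT_DIM = 512   # d_c: compressed KV latent dimension
ROPE_DIM = 64      # d_h^R: decoupled rotary-position key dimension


def mha_cache_elems(n_heads: int, head_dim: int) -> int:
    """MHA caches a full key and a full value vector for every head."""
    return 2 * n_heads * head_dim


def mla_cache_elems(latent_dim: int, rope_dim: int) -> int:
    """MLA caches one shared latent plus one shared positional key."""
    return latent_dim + rope_dim


mha = mha_cache_elems(N_HEADS, HEAD_DIM)
mla = mla_cache_elems(LATENT_DIM, ROPE_DIM)
print(f"MHA: {mha} elems, MLA: {mla} elems, ~{mha / mla:.0f}x smaller")
```

A smaller cache lets each GPU hold longer contexts or serve more concurrent requests during inference, which is where most of this saving shows up; it is not, by itself, a cut in training cost.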

Model optimization techniques and improved algorithms could help reduce the hardware requirements for future AI development. Innovations in cooling systems and power delivery will be essential as computing demands grow.

Impact on Market and Potential Export Controls

The high costs create significant barriers to entry for new AI companies. Only well-funded organizations can afford the billions needed for infrastructure and development.

Export controls on advanced AI chips may further limit who can develop large language models. This could concentrate AI development among a few major players in specific regions.

The market may shift toward specialized AI models that require less computing power. Companies might focus on specific use cases rather than general-purpose AI to manage costs.

Financial Considerations for Scaling AI Development

Capital expenditure planning must account for substantial hardware investments. DeepSeek’s estimated spending, somewhere between $500 million and $1.6 billion, suggests the scale of funding that comprehensive AI development can demand.

Key cost factors:

- GPU procurement and maintenance

- Data acquisition and processing

- Research and development

- Technical talent compensation

- Infrastructure support

Strategic partnerships and cloud computing solutions could help distribute costs. Small companies may need to explore alternative approaches like transfer learning or model fine-tuning to remain competitive.

Frequently Asked Questions

DeepSeek’s cost discrepancy reveals significant gaps between reported and actual expenses, highlighting crucial lessons for AI development cost estimation and transparency in the industry.

What factors contributed to the discrepancy in reported costs for DeepSeek’s project?

The $6 million figure covered only the GPU time for the final training run, a small fraction of total development costs. The actual expenses included massive hardware investments, particularly in GPU infrastructure.

Estimates of the total cost range from $500 million to $1.6 billion, with substantial spending on data acquisition, talent recruitment, and operational expenses.

How did the actual number of GPUs used by DeepSeek compare with initial projections?

DeepSeek operates approximately 50,000 Hopper GPUs across multiple data centers. These GPUs serve as the foundation for AI training and research operations.

This far exceeds the hardware DeepSeek highlighted publicly: its V3 technical report describes training on a cluster of 2,048 Nvidia H800 GPUs, roughly one twenty-fourth of the estimated fleet.

What are the implications of DeepSeek’s higher-than-expected costs on future large-scale AI projects?

The true cost revelation sets more realistic expectations for companies planning similar AI initiatives.

This case demonstrates the substantial capital requirements for developing competitive AI models at scale.

Can the significant cost difference of DeepSeek’s project be attributed to underestimation or unforeseen expenses?

The disparity stems from selective reporting that focused on partial costs rather than total infrastructure investment.

Hardware costs, especially GPUs, proved substantially higher than initially indicated in public statements.

What steps are being taken to improve cost estimation and management for similar AI projects in the future?

Industry analysts now emphasize the importance of comprehensive cost reporting that includes infrastructure, talent, and operational expenses.

Companies are developing more detailed frameworks for calculating total AI project costs.

How will DeepSeek’s actual expenditures influence investor confidence in large-scale AI research and development?

Investors now recognize the true scale of investment needed for competitive AI development.

This revelation helps set more accurate benchmarks for funding requirements in large-scale AI projects.

The case serves as a reference point for venture capital firms evaluating future AI investments.