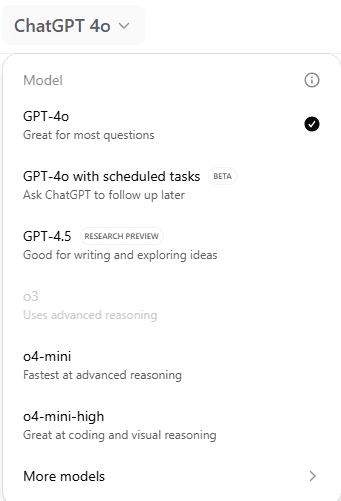

If you’re feeling confused about which ChatGPT model you’re using — whether it’s GPT-4o, GPT-4.1, GPT-4 Turbo, or something like “o3-mini-high” — you’re not alone. OpenAI’s model naming system has become so chaotic that even developers and AI insiders are losing track. And now, the company itself is acknowledging the problem.

OpenAI’s Model Names Are a Mess – And Even Sam Altman Agrees

The Growing Naming Problem

As OpenAI continues to release new AI models with slightly different capabilities and pricing structures, its naming conventions have devolved into an alphabet soup of half-version numbers and vague suffixes like “mini,” “turbo,” “pro,” “nano,” and “o.” Without a consistent or intuitive structure, it’s almost impossible to tell which model is the most powerful, the most affordable, or the most up-to-date.

For instance:

| Model Name | Key Trait/Use |
|---|---|
| GPT-4.1 | An improvement on GPT-4, but not GPT-5 |
| GPT-4 Turbo | Faster and cheaper variant of GPT-4 |
| GPT-4o | “Omni” version focused on multimodal input |
| o3-mini | Small, fast reasoning model |
| o4-mini-high | o4-mini run with higher reasoning effort, though the name doesn’t say so |

Even experienced users are left guessing what each name means in terms of performance, size, and functionality.

Altman and OpenAI Say They’ll Fix It

The confusion reached such a point that OpenAI CEO Sam Altman publicly acknowledged the mess. In a recent post, he said, “How about we fix our model naming by this summer and everyone gets a few more months to make fun of us (which we very much deserve) until then?” This open admission reflects how deeply the issue has resonated with users across the board.

Even Kevin Weil, OpenAI’s Chief Product Officer, called the naming convention “absolutely atrocious” during a podcast appearance. There’s clearly a consensus — inside and outside the company — that something has to change.

Why It Matters

Naming might sound like a minor issue, but it has real implications:

- For developers: It adds friction to workflows when APIs and documentation don’t clearly define capabilities by name.

- For businesses: It makes choosing the right model for a product or tool more difficult, impacting budgets and performance.

- For everyday users: It leads to confusion about what they’re interacting with and what features are available.

Poor naming also creates a marketing problem. When models with major technical differences are labeled almost identically, OpenAI misses the opportunity to highlight the advances it has made, like reduced latency, better multimodal understanding, or improved memory.

A Better Future for AI Model Names?

According to reports, OpenAI is planning to simplify its product lines with the upcoming launch of GPT-5. Rumored to be more unified under the hood, GPT-5 may feature automatic model selection, where the system decides behind the scenes which version to use based on your query. That could reduce the need for users to even think about the model name at all — a move that mirrors how consumers interact with everyday tech like search engines or digital assistants.

Still, until that transition happens, users are stuck navigating a messy landscape of near-identical model names that lack any clear hierarchy.

As the AI space matures, naming models might seem like a small detail — but it’s a crucial part of making powerful tools accessible, trustworthy, and usable at scale. Until OpenAI delivers on its promise to clean up the chaos, the community will continue to joke, vent, and guess their way through version soup.

Key Takeaways

- ChatGPT’s model names are now hard for most people to understand.

- The confusing naming affects both everyday users and experts.

- Better naming would make it easier for everyone to keep up with changes.

Decoding ChatGPT’s Model Naming System

OpenAI’s names for its AI models have grown more complicated in recent years, leaving many users confused. With each new release of ChatGPT and other large language models, it’s become harder to tell apart the capabilities and features of each version.

Evolution of Model Names

OpenAI started with simple names like GPT, GPT-2, and GPT-3. These early models followed a clear pattern: the number increased as the technology advanced.

After GPT-3, things became less straightforward. OpenAI released GPT-3.5 and then GPT-4, and more recently introduced names that pair a letter with a number, such as “o1,” which many users find confusing.

Sam Altman, OpenAI’s CEO, even joked that the company needs to “fix” its naming by summer 2025 because people have had trouble following the updates. This shift away from simple, sequential numbers has made it harder for many to know what each release offers, or how it compares to other versions. The confusion is talked about on forums and social media, with users often struggling to identify the differences between models by name alone.

Key Differences Between Model Versions

Each major model release brings changes in performance, understanding, and safety tools. For example, GPT-3 improved text generation, while GPT-4 offered stronger reasoning and broader knowledge.

Previously, users could look at the version number to guess a model’s features or age. The new naming system, which adds less obvious elements such as a lowercase letter prefix (“o1” or “o4-mini”), offers little hint about improvements or intended use.
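To see why the names resist decoding, here is a rough Python sketch that splits a name into the pieces this article mentions: a family (“gpt-” or the “o” series), a version number, the trailing “o” for omni, a size or speed tier, and a trailing “high.” The regular expression is a hypothetical heuristic built only from the examples in this article, not an official OpenAI scheme, and it simply rejects names that do not fit.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical heuristic, NOT an official OpenAI naming scheme.
NAME_PATTERN = re.compile(
    r"^(?P<family>gpt-|o)(?P<version>\d+(?:\.\d+)?)(?P<omni>o)?"
    r"(?:[- ](?P<tier>turbo|mini|nano|pro))?"
    r"(?:-(?P<effort>high))?$",
    re.IGNORECASE,
)


@dataclass
class ParsedName:
    family: str            # "gpt" or "o" (the o-series)
    version: str           # e.g. "4", "4.1", "3"
    omni: bool             # trailing "o", as in "gpt-4o"
    tier: Optional[str]    # "turbo", "mini", "nano", "pro", or None
    effort: Optional[str]  # "high", as in "o4-mini-high", or None


def parse_model_name(name: str) -> Optional[ParsedName]:
    """Split a model name into parts, or return None if it fits no known pattern."""
    match = NAME_PATTERN.match(name.strip())
    if match is None:
        return None
    return ParsedName(
        family=match["family"].rstrip("-").lower(),
        version=match["version"],
        omni=match["omni"] is not None,
        tier=match["tier"].lower() if match["tier"] else None,
        effort=match["effort"].lower() if match["effort"] else None,
    )


if __name__ == "__main__":
    for name in ["GPT-4.1", "GPT-4 Turbo", "gpt-4o", "o3-mini", "o4-mini-high"]:
        print(name, "->", parse_model_name(name))
```

Even when the parse succeeds, the parts are not comparable across families: a version of “3” in the o-series says nothing about how that model ranks against GPT-4.1, which is exactly the gap described above.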

These models may differ in speed, cost, accuracy, or support for new plugins. Key updates might only be clear in technical documents rather than the model’s name. This makes it difficult for users to choose the right AI technology for their needs without checking detailed release notes or reviews.

Common User Confusions

Many users think that OpenAI’s current naming approach is unclear. Longtime users, AI developers, and new customers struggle to tell one model apart from another because names no longer reflect obvious changes or improvements.

On many community forums, users compare OpenAI’s latest naming conventions to a “maze” or a “code.” Questions like “What’s the difference between GPT-4 and o1?” are common. Some even say the new system makes it easy to pick the wrong model by mistake.

Main points of confusion include:

- No clear order or sequence in names

- Letters or additional symbols without meaning

- Difficulty identifying version upgrades

Because of this, many hope OpenAI will change its approach and make it easier to understand large language model versions in the future. You can see more examples of these issues discussed in the OpenAI Developer Forum and other user communities.

Impacts on Users and Developers

Changes to ChatGPT’s naming system have led to confusion. This creates barriers for users, teachers, and programmers trying to keep up with updates, discover new features, or rely on consistent context and learning resources.

Impediments to Learning and Education

Educators and students now struggle to follow updates or select the right ChatGPT version for their lessons. Many guides or tools reference outdated names, making it unclear which model supports which features.

This is especially hard for people who create curricula or learning materials. Sudden shifts in names or unclear model labels mean resources can become obsolete overnight. Consistency in naming is key for effective learning.

Some teachers have to spend extra time explaining new naming conventions instead of focusing on the subject. For learners, it becomes difficult to practice with the correct tools.

| Issue | Impact |
|---|---|
| Name changes | Outdated instructions and confusion |
| Unclear versions | Harder lesson planning |
| Feature uncertainty | Difficult tool selection |

Challenges in Integration and Programming

Developers face extra hurdles when integrating ChatGPT models into their apps. New releases bring new names, and when older model names are deprecated or retired, code that still calls them breaks or returns errors. Integration guides can quickly become outdated.

Automation scripts might fail if they call an old model name. Programmers then need to track updates more closely, spending time on maintenance instead of building new features.
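One common way to limit this maintenance burden is to keep every model ID in a single configuration and check it against the models actually available before the app starts work. The Python sketch below assumes a hypothetical `MODEL_CONFIG` mapping; the IDs in it are examples only and should be checked against OpenAI’s current model list. With the official `openai` Python package, `[m.id for m in OpenAI().models.list()]` should produce a list of available IDs, but this sketch takes the list as a plain argument and does not call the API.

```python
from typing import Iterable, Mapping

# Hypothetical mapping from the roles an app needs to concrete model IDs.
# Keeping IDs in one place turns a rename or retirement into a one-line change.
# Example IDs only; verify them against OpenAI's current documentation.
MODEL_CONFIG: dict[str, str] = {
    "chat": "gpt-4o-2024-08-06",  # dated snapshot: pinned behavior
    "cheap": "gpt-4o-mini",       # alias: may be repointed to a newer snapshot
}


def missing_models(config: Mapping[str, str], available: Iterable[str]) -> dict[str, str]:
    """Return the roles whose configured model ID is not in `available`."""
    available_ids = set(available)
    return {role: model for role, model in config.items() if model not in available_ids}


if __name__ == "__main__":
    available = ["gpt-4o-2024-08-06", "gpt-4.1"]
    problems = missing_models(MODEL_CONFIG, available)
    if problems:
        print("Update these model IDs before deploying:", problems)
```

Failing at startup with a clear message is usually easier to debug than an automation script that errors halfway through a job.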

Custom instructions are sometimes ignored with newer models, as reported by users after updates. This means developers must reevaluate how to use custom settings and test all behavior again. Some have shared concerns on forums about integration challenges after updates.

Ambiguity in Utility and Feature Discovery

Frequent or unclear name changes make it difficult to know what each model can do. Documentation and feature lists often fail to keep up with every naming switch.

Users might not realize newer models offer different context limits, tools, or performance. Some miss out on improvements because the naming doesn’t reflect added features or changed utility. This leads to time wasted exploring models with guesswork.

People relying on ChatGPT for tasks like summarizing, coding, or data review sometimes use less capable versions. They may not notice simply because the model’s name no longer signals its strengths, leading to missed opportunities to improve workflow and results.
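Because the name no longer signals a model’s strengths, one workaround is to choose models by the capabilities a task needs rather than by name. The Python sketch below picks the cheapest entry in a catalog that meets a minimum context size and an image-input requirement. The catalog entries, their names, and their numbers are hypothetical placeholders; real values would have to come from OpenAI’s model documentation.

```python
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass(frozen=True)
class ModelInfo:
    model_id: str
    context_tokens: int    # maximum context window, in tokens
    supports_images: bool  # whether image input is accepted
    relative_cost: float   # lower is cheaper (hypothetical units)


def pick_model(catalog: Sequence[ModelInfo], min_context: int,
               needs_images: bool) -> Optional[ModelInfo]:
    """Return the cheapest catalog entry that meets the requirements, or None."""
    candidates = [
        m for m in catalog
        if m.context_tokens >= min_context and (m.supports_images or not needs_images)
    ]
    return min(candidates, key=lambda m: m.relative_cost, default=None)


# Hypothetical catalog: names and numbers are placeholders, not real models.
CATALOG = [
    ModelInfo("example-large", 128_000, True, 10.0),
    ModelInfo("example-small", 32_000, False, 1.0),
    ModelInfo("example-long", 1_000_000, False, 4.0),
]

if __name__ == "__main__":
    print(pick_model(CATALOG, min_context=100_000, needs_images=False))
```

The design choice is that the requirements live in code and the catalog lives in data, so a renamed model only changes one catalog entry.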

Broader Consequences for Artificial Intelligence Ecosystem

Confusion over ChatGPT’s naming system can create uncertainty across the artificial intelligence field. This impacts how users, companies, and researchers understand and adopt new models.

Implications for Trust and Adoption

Clear model names help people know what a tool can do and what its limits are. When names get confusing, users and businesses may start to question which version they are using, how recent it is, or if it fits their needs.

Big companies like Microsoft rely on knowing the details and strengths of each artificial intelligence model before using them in Office, Bing, or Copilot. If users can’t tell which ChatGPT model is running under the hood, trust in those services may fall.

Unclear naming can lead to hesitation when adopting new tools. Users might delay switching to the newest model out of worry that it will not match their needs or break older workflows. As a result, innovations in machine learning may not reach their full potential.

Reactions from Big Tech and Academia

Big Tech companies like Microsoft, Google, and Amazon want transparency. When the naming system lacks clarity, they may pressure OpenAI and similar firms for better explanations. They need to make sure AI models work as expected and stay up to date.

Institutions such as MIT and other universities depend on consistent model naming for machine learning research and coursework. If students or scientists can’t match their work to specific model versions, research results can become unreliable. Academic papers might struggle to compare results, and classes can have outdated or mixed instructions.

This breakdown in clarity lowers the value of collaboration across the industry and slows progress. Both industry and academia need shared standards to keep artificial intelligence open and useful to all.

Effects on Legal Advice and Human Values

A model’s identity can impact legal questions. If the naming is unclear, lawyers may find it hard to figure out which model made each decision or recommendation. This causes issues when trying to resolve disputes or check if rules were followed.

Legal advisors working with big tech firms face added difficulty ensuring compliance and accountability. Government regulators may ask for records of which model versions were used during key events.

Human values such as fairness and safety are also at risk. If users can’t confirm which model produced a result, it’s hard to review decisions for bias, errors, or harmful outcomes. This makes it more difficult for organizations to make sure artificial intelligence aligns with public expectations and legal requirements.
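Organizations that need this kind of traceability can at least record which model served each request. OpenAI’s Chat Completions responses include a `model` field, which typically names a dated snapshot even when the request used an alias. The Python sketch below builds an audit record from a response that has already been parsed into a dictionary; the function name and record layout are hypothetical, and the prompt is stored as a hash rather than in full.

```python
import hashlib
import json
from datetime import datetime, timezone


def make_audit_record(requested_model: str, response: dict, prompt: str) -> dict:
    """Build a log entry tying an output to the model that produced it.

    `response` is assumed to be a parsed JSON response with "id" and "model"
    fields; missing fields are recorded as None.
    """
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "requested_model": requested_model,     # what the code asked for, e.g. an alias
        "served_model": response.get("model"),  # what the API reports it used
        "response_id": response.get("id"),
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
    }


if __name__ == "__main__":
    # Hand-written stand-in for an API response (hypothetical values).
    fake_response = {"id": "chatcmpl-123", "model": "gpt-4o-2024-08-06"}
    record = make_audit_record("gpt-4o", fake_response, "Summarize this contract.")
    print(json.dumps(record, indent=2))
```

Logging both the requested and the served model matters because an alias can point to a different snapshot months later.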

Comparison With Other AI Naming Conventions

Many AI fields face similar issues with naming, such as unclear labels and confusing numbering. These problems can impact understanding, trust, and even security around artificial intelligence.

Lessons From Self-Driving Cars and Chatbots

Self-driving car companies like Waymo and Tesla use more direct naming for self-driving features. For example, Tesla calls its system “Autopilot,” which is simple and easy to remember. New updates are numbered or given clear names, such as “Full Self-Driving Beta.” This helps both consumers and experts keep track of different versions and features without much confusion.

Chatbots, including those built by Google and Microsoft, often follow straightforward naming. Google Assistant and Microsoft’s Copilot use clear, branded names instead of codes or version numbers. In contrast, ChatGPT model names like “o3” or “o4-mini-high” confuse many users, who struggle to tell OpenAI’s tools apart.

By sticking with direct names and sequential numbering, other areas like self-driving technology and digital assistants make it easier for users to understand updates.

The Role of Neural Networks and Grammar

Neural network models are often named after architecture terms such as “transformer,” “encoder,” and “decoder,” which describe what their components do. Some companies build these descriptive terms directly into their model names. For example, Google’s BERT stands for “Bidirectional Encoder Representations from Transformers.”

However, the trend toward shortened codes, lowercase prefixes, and numbers that don’t follow a clear sequence (for example, “o4-mini-high”) makes it hard for the public to guess what a model does or how it improves on previous versions. Descriptive names help people understand a model’s purpose, while coded names hide its role and what has changed.

Table of Examples

| Model Name | Style | Clarity |
|---|---|---|
| BERT | Descriptive | High |
| Autopilot (Tesla) | Functional | High |
| o4-mini-high | Coded | Low |

Risks of Confusion and Intellectual Property Theft

Confusing naming systems create bigger risks than just misunderstanding. When AI models are hard to tell apart, it gets easier for bad actors to copy, repackage, or steal ideas under new names. It can also make it harder to trace which neural network version is responsible for certain actions if a problem arises.

In the self-driving car industry, clear names reduce liability questions and make it easier to identify what kind of intelligence or features each product contains. For chatbots and models like ChatGPT, unclear names can obscure important details, such as which version is in use and which security fixes it includes, potentially leaving gaps that attackers could exploit.

OpenAI’s own CEO has acknowledged the naming problem and promised to “fix” it, although his widely quoted post was about confusion rather than security or theft.

Frequently Asked Questions

ChatGPT model names and versions often confuse both new and longtime users. The system for naming these AI models changes often and can be hard to follow without a clear guide.

What is the rationale behind the naming conventions used for ChatGPT’s new models?

Naming often reflects internal development stages or unique features. Some names may include letters, numbers, or code names that are not always clear to the public. As updates come quickly, the reasons behind certain names are rarely made public or explained in depth.

How does the versioning system of ChatGPT models correspond to their features and improvements?

Version numbers sometimes increase with big updates that add new abilities or fix large problems. However, not all small updates receive clear version numbers. This inconsistency makes it hard to track what each model really offers compared to the last.

Can you explain the differences between the various generations of ChatGPT models?

Each major generation introduces shifts in speed, accuracy, or understanding of questions. For example, some generations are much better at handling longer conversations, while others focus on faster responses or fewer mistakes. These improvements are often listed in tech announcements, but the names themselves do not always make these changes obvious.

Is there a comprehensive guide available for understanding the progression of ChatGPT model updates?

Online communities and news sites sometimes publish guides about ChatGPT models and their updates. OpenAI also publishes model documentation and release notes, but no single official source explains every change alongside the reasoning behind each name. Much of the remaining context comes from scattered posts or help forums.

What are the key factors that influence the naming of new ChatGPT models?

Names appear to reflect a mix of internal code names, research milestones, and marketing needs. Sometimes models get names based on technical upgrades. Other times, the names are chosen for product launches but may lack a pattern, adding to the confusion.

How can users keep track of ChatGPT’s model updates and their respective capabilities?

Staying up to date usually means following forums, AI news portals, or official blog posts. Users can also check community discussions for reports on new versions and features. There is no single, trusted dashboard that always lists every update as soon as it happens.
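Developers using the API can automate part of this tracking by saving the list of available model IDs and comparing it on each run. The Python sketch below reports which IDs were added or removed since the last check. The file name is hypothetical, and the current list is passed in as an argument; with the official `openai` Python package it could come from `[m.id for m in OpenAI().models.list()]`. This only covers API model IDs, not changes inside the ChatGPT app.

```python
import json
from pathlib import Path
from typing import Iterable


def diff_model_ids(previous: Iterable[str], current: Iterable[str]) -> tuple[list[str], list[str]]:
    """Return (added, removed) model IDs, each sorted alphabetically."""
    prev_ids, curr_ids = set(previous), set(current)
    return sorted(curr_ids - prev_ids), sorted(prev_ids - curr_ids)


def check_for_changes(snapshot_path: Path, current: Iterable[str]) -> tuple[list[str], list[str]]:
    """Diff `current` against the IDs saved at `snapshot_path`, then overwrite the file.

    On the first run there is no saved file, so every current ID counts as added.
    """
    current_ids = sorted(set(current))
    previous = json.loads(snapshot_path.read_text()) if snapshot_path.exists() else []
    snapshot_path.write_text(json.dumps(current_ids))
    return diff_model_ids(previous, current_ids)


if __name__ == "__main__":
    added, removed = diff_model_ids(["gpt-4o", "o1"], ["gpt-4o", "o3", "o4-mini"])
    print("added:", added, "removed:", removed)
```

In a scheduled job, a call such as `check_for_changes(Path("known_models.json"), ids)` could post any differences to a team channel.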