OpenAI’s DALL-E series represents a significant leap in AI-powered image generation technology. DALL-E, introduced in January 2021, was the first iteration that showcased the ability to create images from text descriptions. DALL-E 3, released in 2023, offers dramatically improved capabilities over its predecessors, with enhanced understanding of nuance and detail that enables users to translate complex ideas into remarkably accurate images.

The evolution from DALL-E to DALL-E 2 brought major improvements in image resolution, realism, and contextual understanding. DALL-E 2, launched in April 2022, could generate more realistic images with better comprehension of spatial relationships and abstract concepts. The system also introduced features like inpainting and outpainting, allowing users to edit existing images or extend them beyond their original boundaries.

Comparing the models reveals substantial progression in image quality and prompt interpretation. DALL-E 3 demonstrates vastly superior performance when handling detailed prompts compared to DALL-E 2, with noticeably better rendering of human figures, complex scenes, and text within images. This advancement makes the technology increasingly valuable for creative professionals, content creators, and businesses seeking visual solutions without extensive design resources.

Understanding DALL·E: Evolution and Capabilities

DALL·E is OpenAI’s revolutionary AI-powered image generation model that transforms text descriptions into highly detailed visuals. Since its inception, the model has undergone multiple upgrades, each improving quality, coherence, and creativity. This guide breaks down the major versions—DALL·E, DALL·E 2, and DALL·E 3—highlighting their strengths, weaknesses, and how they compare.

How DALL·E Works

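At its core, DALL·E is a deep learning system trained on large collections of images paired with text captions. The original DALL·E was a transformer that generated an image as a sequence of tokens, much as a language model generates text. DALL·E 2 and DALL·E 3 are built on diffusion models, which start from random noise and refine it step by step into an image that matches the prompt. In every version, the text prompt conditions the generation, steering which objects, styles, and layouts appear. As newer versions were released, the model improved in areas like: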

- Image Quality: Each version enhances realism, detail, and resolution.

- Text Interpretation: DALL·E 3, in particular, understands complex prompts far better than its predecessors.

- Coherence & Accuracy: Earlier models struggled with logical consistency (e.g., correct placement of body parts or following specific artistic styles), but improvements over time have made images more refined and visually accurate.

Now, let’s compare the three major versions side by side.

DALL·E vs. DALL·E 2 vs. DALL·E 3: A Feature Breakdown

| Feature | DALL·E (Original) | DALL·E 2 | DALL·E 3 |
|---|---|---|---|
| Release Year | 2021 | 2022 | 2023 |
| Image Quality | Low to moderate detail | Sharper, improved realism | Highly detailed, near-photorealistic |
| Text Understanding | Limited | Better interpretation | Exceptional accuracy with long prompts |
| Image Resolution | 256×256 pixels | Up to 1024×1024 pixels (square only) | 1024×1024, 1792×1024, or 1024×1792 pixels |
| Artistic Style Variety | Limited | Good range of styles | Extremely flexible, including fine-tuned art directions |
| Handling of Faces & Hands | Often distorted | Improved but imperfect | Much better accuracy |
| Prompt Following | Struggles with complex prompts | Decent but with inconsistencies | Much stronger adherence to detailed prompts |
| Editing Capabilities | No public editing tools | Introduced inpainting and outpainting | Conversational refinement and region editing in ChatGPT |
| AI Ethics & Safety | Minimal filtering | Better safeguards against biases | Strong content moderation and bias reduction |
| Ideal For | Experimental fun, simple prompts | More refined creative projects | Professional-level artwork and marketing visuals |

Key Improvements Over Time

DALL·E 2: A Major Leap Forward

DALL·E 2 made significant improvements over the first version by enhancing resolution, coherence, and overall artistic ability. One of its biggest advancements was inpainting, allowing users to edit parts of an image with AI-generated elements. This version also improved at generating human faces and more complex compositions, though occasional distortions still appeared.

DALL·E 3: The Game Changer

DALL·E 3 brought the most dramatic leap in AI-generated imagery. It follows complex prompts far more reliably than earlier versions, so results usually match detailed instructions closely. It can create intricate, high-resolution artwork, making it a viable tool for designers, marketers, and digital artists. OpenAI also implemented stronger content moderation, designed to stop the model from generating harmful or misleading images.

DALL·E 3 vs. Other AI Image Models

While DALL·E 3 is a leader in AI art generation, it faces competition from models like Midjourney, Stable Diffusion, and Adobe Firefly. Here’s how DALL·E 3 stacks up:

- Midjourney excels at artistic stylization but is less precise in following text prompts.

- Stable Diffusion is highly customizable and open-source but requires technical expertise.

- Adobe Firefly integrates seamlessly into Photoshop and other Adobe tools but is more niche-focused.

DALL·E 3 stands out for ease of use, accuracy in text-to-image generation, and its balance between realism and artistic flexibility.

What’s Next for DALL·E?

Given the rapid evolution of AI models, future versions of DALL·E could include:

- Higher resolutions with even more photorealistic details.

- Interactive generation, where users can tweak images dynamically.

- Better storytelling capabilities, allowing for multi-image sequences.

- Real-time image synthesis, making AI art even more responsive.

Rumors About DALL·E 4

OpenAI has not officially announced the development or release of DALL·E 4. The most recent version, DALL·E 3, was released in late 2023, introducing significant advancements in image quality and prompt comprehension.

While there is no concrete information about DALL·E 4, the AI community has speculated on potential features and improvements that could be included in a future release:

- Enhanced Prompt Understanding: Building upon DALL·E 3’s capabilities, future iterations might interpret even more complex and nuanced prompts, allowing for more precise image generation.

- Higher Resolution Outputs: An increase in image resolution could provide more detailed and photorealistic visuals, catering to professional and creative industries.

- Improved Text Generation Within Images: Advancements may enable the model to generate legible and contextually appropriate text within images, enhancing applications like graphic design and advertising.

- Ethical and Safety Enhancements: Ongoing improvements in content moderation and bias reduction are likely to ensure responsible AI usage.

These anticipated features are based on the trajectory of AI development and community discussions. However, without official confirmation from OpenAI, the specifics of DALL·E 4 remain speculative.

Key Takeaways

- DALL-E has evolved from a basic text-to-image model to DALL-E 3, which offers unprecedented accuracy in translating complex text prompts into detailed visuals.

- Each iteration brings significant improvements in image quality, with DALL-E 3 showing superior understanding of nuance, spatial relationships, and human anatomy.

- The technology continues to transform creative workflows while raising important questions about AI-generated content’s accessibility, ethical use, and impact on traditional art.

Evolution of AI Image Generation

DALL·E has revolutionized AI-generated art, pushing the boundaries of creativity and automation. Whether you’re a casual user exploring AI’s capabilities or a professional leveraging it for design, understanding the differences between DALL·E, DALL·E 2, and DALL·E 3 can help you choose the right tool for your needs. As AI art continues to evolve, the potential for new, groundbreaking creative applications is limitless.

AI image generation has undergone remarkable transformation through OpenAI’s DALL-E series. These models represent significant leaps in how machine learning systems interpret text prompts and convert them into visual content.

From DALL-E to DALL-E 2 and DALL-E 3

The original DALL-E, whose name combines the artist Salvador Dalí and Pixar’s robot WALL-E, established the foundation for AI art creation. It demonstrated how deep learning could generate images from text descriptions, though with limitations in detail and consistency.

DALL-E 2, released as a research project before entering beta, marked a substantial improvement. This version produced more coherent and detailed images but still struggled with complex prompts and sometimes created vague, poorly blended visuals.

DALL-E 3 represents OpenAI’s most advanced iteration. The leap from DALL-E 2 to 3 is significant—images from DALL-E 3 appear more professional and artistic. The model better understands nuanced prompts and maintains stronger visual consistency.

Technological Advances in AI Systems

The evolution of these models reflects broader advances in neural network architecture and transformer technology. Each version employs increasingly sophisticated deep learning techniques to process and interpret language.

DALL-E 3 was trained on images paired with highly descriptive, synthetically generated captions. OpenAI credits this for much of the model’s improved understanding of context and intent in text prompts, which allows for more precise interpretation of detailed requests.

Safety features have evolved alongside capabilities. DALL-E 2 introduced limitations on generating violent, hateful, or adult content by filtering training data. DALL-E 3 further refined these protections while adding safeguards against generating images of public figures.

The progression also shows improvements in visual consistency, with DALL-E 3 maintaining better coherence across elements within generated images compared to earlier versions.

Understanding DALL-E

DALL-E is an AI system developed by OpenAI that generates images from textual descriptions. It represents a significant advancement in artificial intelligence’s ability to understand and visualize abstract concepts and detailed instructions.

Core Capabilities of DALL-E

DALL-E excels at transforming text into visual content with remarkable accuracy. The system can generate images based on specific descriptions, combining multiple concepts, styles, and elements into cohesive visuals.

Each version has shown significant improvements in image quality. DALL-E 2 produces higher resolution images with more realistic details compared to its predecessor. DALL-E 3 further enhances this capability with even more precise interpretations of complex prompts.

The AI can understand abstract concepts and nuanced descriptions. For example, it can visualize “a teddy bear on a skateboard in Times Square” or “an astronaut playing basketball on the moon” without prior examples of these specific scenarios.

DALL-E’s technology enables creative applications across marketing, art, design, and education. It helps professionals visualize concepts quickly without advanced graphic design skills.

Limitations and Feedback

DALL-E has built-in safety restrictions to prevent harmful content generation. OpenAI has implemented measures to limit the creation of violent, hateful, or adult images by removing explicit content from training data.

Each version addresses previous limitations. DALL-E 3 includes enhanced safety protocols compared to DALL-E 2, with specific restrictions on generating images of public figures by name.

Outside input has also shaped DALL-E’s development. OpenAI worked with “red teamers” (experts who stress-test the system) to identify risks and improve safety measures related to propaganda and misrepresentation.

The systems sometimes struggle with complex spatial relationships or very specific details in textual descriptions. Users may need to refine prompts to achieve desired results.

DALL-E’s interface has also evolved based on user experience. DALL-E 2 was used mainly through OpenAI’s dedicated web app, which included an editor for inpainting and outpainting. DALL-E 3 is used mainly inside ChatGPT, where users refine images through conversation rather than rewriting prompts from scratch.

DALL-E 2: Advancements and Differences

DALL-E 2 represented a significant leap forward from its predecessor, with dramatically improved image generation capabilities and expanded practical applications. The system brought higher resolution outputs, more accurate text interpretation, and a revised content policy that balanced creative freedom with ethical considerations.

Improved Image Quality and Resolution

DALL-E 2 revolutionized AI-generated imagery with substantially enhanced output quality compared to the original DALL-E. OpenAI describes its images as having 4x greater resolution: 1024×1024 pixels versus the original’s 256×256, four times the width and height. This produces more detailed and realistic results.
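The "4x" figure describes each side of the image rather than the total pixel count. A quick Python check makes the distinction explicit. It compares the original's 256×256 outputs with DALL-E 2's 1024×1024 outputs, using a hypothetical helper named `resolution_gain`:

```python
def resolution_gain(old: tuple[int, int], new: tuple[int, int]) -> tuple[float, float]:
    """Return (per-side scale factor, total pixel-count ratio) for two image sizes."""
    old_w, old_h = old
    new_w, new_h = new
    side = new_w / old_w  # assumes both sizes share the same aspect ratio
    pixels = (new_w * new_h) / (old_w * old_h)
    return side, pixels


side, pixels = resolution_gain((256, 256), (1024, 1024))
print(f"{side:.0f}x per side, {pixels:.0f}x as many pixels")
# prints: 4x per side, 16x as many pixels
```

Quadrupling both width and height gives sixteen times as many pixels, which is where much of the extra room for detail comes from.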

The system adopted a diffusion model approach, replacing the original’s autoregressive transformer with a diffusion decoder guided by CLIP image embeddings. This technical shift enabled DALL-E 2 to generate images with more accurate proportions and better understanding of spatial relationships.
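Diffusion models are trained to undo a gradual noising process. The toy Python sketch below shows only the forward (noising) half, in the style of the DDPM formulation. In that formulation, a clean sample x0 is blended with Gaussian noise as x_t = sqrt(alpha_bar_t) * x0 + sqrt(1 - alpha_bar_t) * noise.

This is not OpenAI's implementation. The linear schedule, its endpoints, and the four-number "image" are illustrative assumptions.

```python
import math
import random


def linear_betas(steps: int, start: float = 1e-4, end: float = 0.02) -> list[float]:
    """Per-step noise variances increasing linearly (a common DDPM choice); steps >= 2."""
    return [start + (end - start) * i / (steps - 1) for i in range(steps)]


def cumulative_alphas(betas: list[float]) -> list[float]:
    """alpha_bar[t] = product of (1 - beta) up to step t: the fraction of signal left."""
    out, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        out.append(running)
    return out


def noise_sample(x0: list[float], alpha_bar: float, rng: random.Random) -> list[float]:
    """Jump straight to a step: x_t = sqrt(a) * x0 + sqrt(1 - a) * standard normal noise."""
    signal, noise = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [signal * v + noise * rng.gauss(0.0, 1.0) for v in x0]


rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_betas(1000))
image = [0.9, -0.3, 0.5, 0.1]  # stand-in for pixel values scaled to [-1, 1]
for t in (0, 250, 999):
    # By the last step alpha_bar is close to 0, so the sample is almost pure noise.
    noisy = noise_sample(image, alpha_bars[t], rng)
    print(t, round(alpha_bars[t], 4), [round(v, 2) for v in noisy])
```

A trained model learns the reverse direction. It estimates and removes the noise one step at a time while conditioning on the prompt; in DALL-E 2's case, that conditioning is a CLIP image embedding. Because the closed-form blend jumps directly to any step, training can sample random steps cheaply.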

Text interpretation also saw major improvements. DALL-E 2 demonstrates a stronger ability to understand complex descriptive prompts and translate them into coherent visual elements.

The model excels at creating photorealistic imagery, particularly with natural scenes, objects, and some human representations. Its enhanced capabilities allow for more nuanced art styles and compositions.

Expanded Content Policy and Use-Cases

OpenAI implemented a more flexible content policy with DALL-E 2, allowing for broader creative applications while maintaining ethical safeguards. This balanced approach opened the platform to more professional use-cases.

The system found applications in fields like graphic design, content creation, and digital art. Many professionals incorporated DALL-E 2 into their workflows for concept visualization and creative ideation.

Despite expanded permissions, DALL-E 2 maintained restrictions on generating violent, explicit, or potentially harmful content. OpenAI implemented these guardrails to prevent misuse while still enabling artistic expression.

The improvements in DALL-E 2 made AI-generated imagery more accessible to non-technical users. Its release through OpenAI’s API in late 2022 also let developers build image generation into their own products, creating new opportunities for creative professionals to incorporate AI assistance into their work.

Introducing DALL-E 3 and Its Innovations

DALL-E 3 represents a significant advancement in AI image generation technology from OpenAI. This latest iteration offers remarkably improved text comprehension and image fidelity compared to its predecessors.

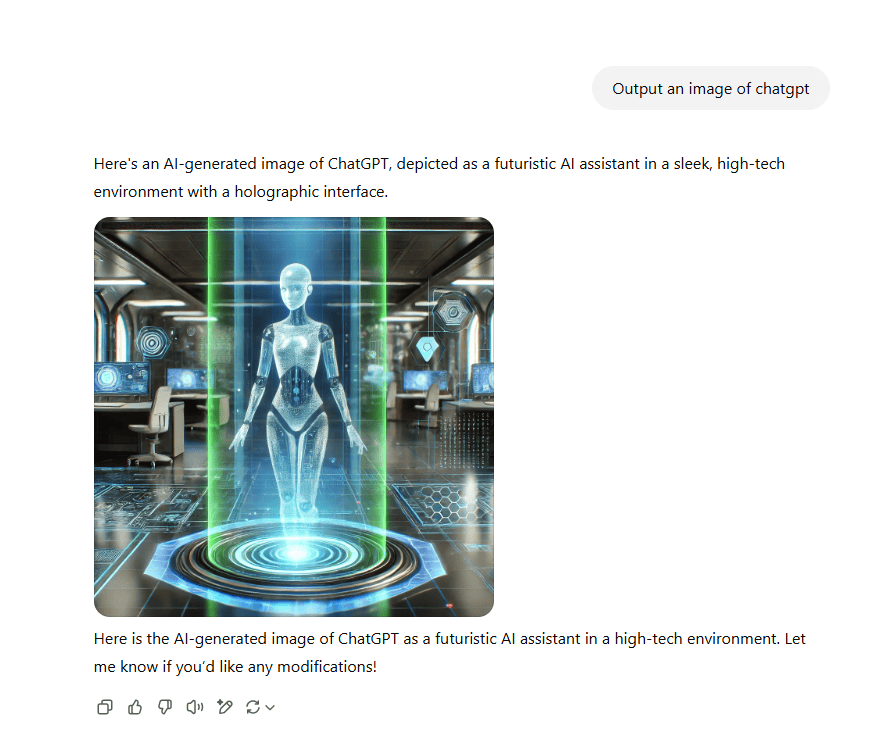

Integration with Other AI Models

DALL-E 3 integrates seamlessly with ChatGPT, creating a powerful AI ecosystem that enhances user experience. At launch, this integration let ChatGPT Plus and Enterprise users generate images directly within conversations. Users can describe their desired images in natural language, and ChatGPT helps refine those descriptions to produce optimal results.

The collaboration between these AI systems creates a more intuitive creative process. Users don’t need specialized knowledge of prompt engineering to get impressive results. ChatGPT acts as an intermediary that translates vague concepts into detailed prompts that DALL-E 3 can interpret accurately.
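Developers see a similar rewriting step in OpenAI's Images API. DALL-E 3 automatically expands the prompt it receives and returns the version it actually used as `revised_prompt`.

Below is a minimal Python sketch, assuming the official `openai` package (v1 or later). The helper name `generate_with_revision` is hypothetical. The client is passed in, so the function can be tried with a stand-in object and no network access.

```python
def generate_with_revision(client, prompt: str, size: str = "1024x1024") -> tuple[str, str]:
    """Request one DALL-E 3 image; return (image URL, prompt the model actually used)."""
    response = client.images.generate(
        model="dall-e-3",
        prompt=prompt,
        size=size,
        quality="standard",
        n=1,  # DALL-E 3 accepts only one image per request
    )
    image = response.data[0]
    return image.url, image.revised_prompt


# Example (requires network access and an OPENAI_API_KEY environment variable):
#   from openai import OpenAI
#   url, used_prompt = generate_with_revision(OpenAI(), "a cozy reading nook, watercolor")
#   print(used_prompt)  # typically longer and more specific than the input prompt
```

Logging `revised_prompt` next to the original prompt is a practical way to see how the expansion changed a request.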

This integration also improves accessibility to AI image generation. The conversational interface makes the technology more approachable for users without technical backgrounds.

Responsible Use and Harmful Generations

OpenAI implemented robust safety measures in DALL-E 3 to prevent misuse. The model includes specific mitigations to decline requests for generating images of public figures by name, reducing the risk of creating misleading content.

The development process involved collaboration with red teamers—domain experts who stress-tested the model in various risk scenarios. These efforts focused on identifying and addressing potential issues related to propaganda and harmful visual representations.

DALL-E 3 also features improved safety performance in addressing visual biases. The system works to avoid both over-representation and under-representation in its generated images, creating more balanced and fair visual outputs.

These safety measures reflect OpenAI’s commitment to responsible AI development. The company continues to refine its approach to harmful content generation, balancing creative capabilities with ethical considerations.

Practical Applications and Use-Cases

DALL-E models have transformed how we approach visual content creation by converting text prompts into detailed images. The technology offers significant value across multiple industries from marketing to education, with each version bringing enhanced capabilities.

Content Creation and Media

DALL-E has revolutionized content creation workflows for marketers, designers, and social media managers. These professionals can quickly generate custom illustrations, social media graphics, and marketing materials by simply describing what they need.

The technology excels at creating unique visuals for blog posts, advertisements, and promotional materials without requiring advanced design skills. Content creators can test multiple visual concepts in minutes rather than hours.

For media companies, DALL-E helps visualize news stories, create conceptual illustrations, and produce visuals when photography isn’t possible or practical. This capability is particularly valuable for tight deadlines or abstract topics.

Digital publishers can use DALL-E 3’s improved text rendering to create images with embedded text that appears naturally within the scene, which is useful for memes, quotes, and informational graphics.

Design and Product Prototyping

Product designers leverage DALL-E to rapidly prototype concepts before investing in physical mockups. This accelerates the design process and reduces development costs significantly.

The technology allows designers to:

- Generate multiple design variations quickly

- Visualize products in different contexts and environments

- Test color schemes and styling options

- Create realistic product renderings for client presentations

Interior designers use DALL-E to show clients how furniture arrangements might look in their spaces. Fashion designers can quickly visualize new patterns, styles, and collections.

Architectural firms employ the technology to create concept visualizations for proposed buildings and spaces, helping clients better understand design proposals before construction begins.

Educational and Creative Storytelling

Educators have embraced DALL-E to create visual aids that make complex concepts more accessible. Science teachers can generate images showing natural processes, while history teachers can create visual representations of historical events.

The technology helps produce:

- Custom illustrations for educational materials

- Visual explanations of abstract concepts

- Culturally diverse representations in teaching materials

- Accessible learning content for different learning styles

Authors and storytellers use DALL-E to visualize characters, settings, and scenes from their narratives. This capability proves especially valuable for independent publishers with limited illustration budgets.

Game developers utilize the technology to create concept art and environmental designs in early development stages. Film studios employ it for storyboarding and visualizing scenes before committing to expensive production.

Accessibility and Subscription Models

DALL-E models have evolved in their accessibility and pricing structures, creating different options for users with varying needs and budgets.

Availability for Content Creators and Designers

DALL-E has democratized access to AI image generation for many content creators and designers. The latest model, DALL-E 3, offers significant improvements in image quality and prompt understanding compared to its predecessors. This advancement enables small businesses and independent creators who cannot afford professional design services to create unique visual content.

Users can access DALL-E through multiple channels. It’s available through OpenAI’s platform directly and integrated into other services like Azure OpenAI Service, which provides enterprise-level access with additional security features.

The technology has removed barriers to custom graphic design, allowing users without technical expertise to generate complex visuals through simple text prompts.

Subscription Tiers and Limitations

OpenAI offers several subscription options for accessing DALL-E models:

Free Tier:

- Limited number of generations per month

- Basic access to the technology

- Lower priority during high usage periods

Paid Subscriptions:

- Higher generation limits

- Priority access

- Additional features like higher resolution outputs

Each tier comes with specific limitations. Free users face stricter rate limits and may encounter queues during peak times. Premium subscribers gain faster processing and more generous usage allowances.
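When a request hits a rate limit, the usual remedy is to wait and retry with increasing delays. Below is a generic Python sketch of exponential backoff with "full jitter". It deliberately avoids any specific SDK, so `is_retryable` is a caller-supplied check. With the `openai` package, for instance, you might pass `lambda e: isinstance(e, openai.RateLimitError)`. The function name and the delay values are illustrative assumptions, not OpenAI's published limits.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_backoff(
    call: Callable[[], T],
    is_retryable: Callable[[Exception], bool],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    max_delay: float = 30.0,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Run call(), retrying retryable errors with exponentially growing, jittered waits."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        try:
            return call()
        except Exception as exc:
            # Re-raise errors we should not retry, or the last failure.
            if not is_retryable(exc) or attempt == max_attempts - 1:
                raise
            delay = min(max_delay, base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
            sleep(random.uniform(0, delay))  # jitter spreads out simultaneous retries
    raise RuntimeError("unreachable")  # the loop always returns or raises
```

Injecting `sleep` keeps the function easy to test and lets an async or logging variant reuse the same retry logic.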

DALL-E models also have built-in safety limitations. The systems include mitigations to prevent generation of violent, hateful, or adult content. DALL-E 3 specifically includes additional restrictions that decline requests for generating public figures by name.

These safety guardrails balance creative freedom with responsible use of the technology.

Ethical Considerations in AI-Powered Image Generation

AI image generators like DALL-E raise important ethical questions about content creation, representation, and potential misuse. These tools require careful policies to balance creative freedom with responsible use.

Content Policies and Political Content

OpenAI has implemented strict content policies for DALL-E models to address concerns about political manipulation. These policies restrict the generation of images featuring recognizable political figures or politically sensitive content that could be used for misinformation campaigns.

The restrictions represent a challenging balance between creative freedom and preventing harmful misuse. Critics argue these policies sometimes limit legitimate artistic and journalistic expression. For example, DALL-E 3 declines requests that name public figures, politicians included, even in neutral or historical contexts.

The debate intensifies as these technologies improve. Should AI companies serve as content gatekeepers? This question becomes more complex as these tools become widely available to the public, potentially influencing political discourse and public opinion.

Avoiding Harmful or Misleading Generations

DALL-E models include safeguards to prevent generation of violent, adult, or hateful content. OpenAI continuously updates these filters based on user feedback and emerging issues.

The systems also aim to prevent the creation of deepfakes that could harm individuals’ reputations or spread misinformation. This includes refusing to generate photorealistic images of specific individuals without consent.

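Technical measures also mark content as AI-generated. DALL-E 2 images carried a visible signature (a row of colored squares in the bottom-right corner). OpenAI later began adding C2PA provenance metadata to DALL-E 3 images, although metadata of this kind can be stripped. These measures address concerns about authenticity in an era where distinguishing human-created from AI-created images grows increasingly difficult.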

There’s also ongoing work to reduce bias in image generation. Early versions of DALL-E showed tendencies to reinforce stereotypes about gender, race, and professions. OpenAI has worked to improve these issues, though challenges remain in creating fully fair and representative systems.

Future Prospects of Text-to-Image AI Models

As text-to-image AI models like DALL-E continue to evolve, they promise to reshape creative industries and open new possibilities for both professional and everyday applications.

Ongoing Research and Development

Research teams are actively working to address current limitations in text-to-image models. One major focus is improving the understanding of complex prompts and spatial relationships, allowing for more precise generation of requested elements.

Researchers are also tackling ethical concerns through better content filtering systems. These systems aim to prevent the creation of harmful imagery while still allowing artistic expression.

Technical improvements are expected in image resolution and consistency. Future models will likely generate higher quality images with fewer artifacts and better coherence across elements.

The integration with other AI systems represents another frontier. Combining text-to-image capabilities with video generation, 3D modeling, and interactive applications could create powerful new creative tools.

Potential for New Creative Domains

Text-to-image AI is expanding beyond digital art into practical applications across multiple industries. In fashion design, AI models can generate clothing concepts and pattern variations, helping designers explore ideas before production.

Medical imaging may benefit from AI-generated visualizations for educational materials and treatment planning. These tools could help doctors communicate complex conditions to patients through clear visual representations.

Product development teams are using AI-generated imagery to rapidly prototype concepts. This speeds up the design process by visualizing multiple variations quickly.

Experimental artistic communities are embracing these tools for new forms of expression. The collaboration between human creativity and AI capabilities is creating entirely new aesthetic approaches.

Publishing and marketing professionals increasingly use text-to-image AI to create consistent visual branding without extensive graphic design resources. This democratizes high-quality visual content creation.

Frequently Asked Questions

DALL-E has evolved significantly across its different versions, with each iteration bringing substantial improvements in image quality, prompt understanding, and user experience.

How do the capabilities of DALL-E 3 differ from those of its predecessors?

DALL-E 3 demonstrates a superior understanding of text prompts compared to earlier versions. The model can interpret complex instructions with greater accuracy and produce images that more closely match user intentions.

DALL-E 3 handles abstract concepts and combinations of ideas more effectively than DALL-E 2. Users can describe intricate scenes or visual metaphors and receive results that capture these nuances with remarkable precision.

The newer model also shows improved handling of text within images. While DALL-E 2 often struggled with rendering readable text, DALL-E 3 can generate coherent and legible text elements within its creations.

What improvements have been made to image resolution in DALL-E 3 compared to DALL-E 2?

DALL-E 3’s standard square output is 1024×1024, the same as DALL-E 2’s largest size. It adds wide (1792×1024) and tall (1024×1792) formats, and its images typically show more intricate textures, sharper edges, and finer details. Through the API, an “hd” quality setting requests still finer detail.

The added detail particularly benefits complex scenes with multiple subjects or elements. Background details that might have appeared blurry or simplified in DALL-E 2 now maintain clarity and definition in DALL-E 3.

Color reproduction has also improved significantly. DALL-E 3 produces more vibrant, accurate, and consistent colors across the entire image compared to its predecessor.

Can you explain how the user interface of DALL-E 3 operates?

DALL-E 3 features a streamlined interface that focuses on natural language prompting. Users can enter detailed descriptions of their desired images without needing to learn specialized prompt engineering techniques.

Depending on the interface, a prompt may return a single image or several variations to choose from. Through the API, DALL-E 3 returns one image per request, while DALL-E 2 can return up to ten. Users can also refine their prompts based on initial results to guide the AI toward their vision.

Integration with ChatGPT allows users to develop their image concepts through conversation. This interactive approach helps users clarify their ideas and receive guidance on effective prompt writing.

What are the limitations or disadvantages of using DALL-E 2 for image generation?

DALL-E 2 struggles with accurately rendering human faces and proportions. Facial features often appear distorted or asymmetrical, particularly when generating realistic human portraits.

The model has difficulty understanding complex spatial relationships and sometimes misinterprets positional instructions in prompts. Users frequently find that elements are incorrectly placed or oriented within the scene.

Text rendering is a significant weakness in DALL-E 2. The model typically produces gibberish or garbled text when asked to include writing within images, limiting its usefulness for designs requiring legible text.

In what ways does DALL-E 3’s handling of aspect ratios enhance its performance over previous models?

DALL-E 3 offers flexible aspect ratio options that weren’t available in earlier versions. Users can specify portrait, landscape, or square formats to better suit their specific needs.

The model maintains consistent quality across different aspect ratios. Through the API, DALL-E 2 produced only square images (256×256, 512×512, or 1024×1024), and other shapes required outpainting in its editor. DALL-E 3 generates wide and tall images directly, with detail and coherence comparable to its square output.

This flexibility makes DALL-E 3 more practical for creating images for various platforms and media. Users can generate content optimized for websites, social media, print materials, or mobile applications without quality compromise.
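In OpenAI's Images API, aspect ratio is chosen through the `size` parameter. The sketch below maps an orientation to a supported size for each model. The size lists reflect OpenAI's API documentation for `dall-e-2` and `dall-e-3` at the time of writing and may change. The names `SUPPORTED_SIZES` and `pick_size` are hypothetical.

```python
# Sizes accepted by the Images API's `size` parameter (subject to change).
SUPPORTED_SIZES = {
    "dall-e-2": ["256x256", "512x512", "1024x1024"],  # square only
    "dall-e-3": ["1024x1024", "1792x1024", "1024x1792"],  # square, wide, tall
}


def _shape(width: int, height: int) -> str:
    """Classify a size as 'square', 'landscape', or 'portrait'."""
    if width == height:
        return "square"
    return "landscape" if width > height else "portrait"


def pick_size(model: str, orientation: str) -> str:
    """Return the largest supported size string for the requested orientation."""
    matches = []
    for size in SUPPORTED_SIZES[model]:
        width, height = (int(v) for v in size.split("x"))
        if _shape(width, height) == orientation:
            matches.append((width * height, size))
    if not matches:
        raise ValueError(f"{model} has no {orientation} size")
    return max(matches)[1]  # largest pixel area wins
```

Choosing by pixel area means DALL-E 2 requests default to its largest square size. Pass a smaller size explicitly when speed or cost matters more than detail.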

How does the number of parameters in DALL-E 3 impact its image generation capabilities?

OpenAI has not published DALL-E 3’s parameter count, so claims that it is a larger model are speculation. For comparison, the original DALL-E had 12 billion parameters.

OpenAI’s DALL-E 3 research report instead attributes much of the model’s better prompt following to its training data. The model was trained on images paired with highly descriptive synthetic captions, which helps it connect detailed text to the right visual elements.

In general, larger models need more computation per image. Without published figures, however, it is not possible to say how DALL-E 3’s size affects generation time or cost.